How to Prevent AI Supply Chain Attacks: Securing Hallucinated Dependencies

Quick Summary: Key Takeaways

- The Threat: AI models often "hallucinate" code libraries that don't exist; attackers register these names to deliver malware.

- Verification is Mandatory: Never run `pip install` or `npm install` on an AI suggestion without checking the package and its repository first.

- Typosquatting: Generative AI makes typos, and attackers exploit this by registering "slightly wrong" package names (e.g., `reqests` vs. `requests`).

- Private Registries: The most reliable defense is an internal artifact server that blocks installs of unknown packages from public registries.

- Audit Tools: Automated scanners in your CI/CD pipeline should catch suspicious or unknown dependencies before deployment.

Introduction: The "Ghost" Library Problem

In 2026, the most dangerous hacker in your system might be your own AI coding assistant.

Developers asking how to prevent AI supply chain attacks in code are often shocked to learn that LLMs like GPT-5 and Gemini can confidently invent software libraries that do not exist.

This phenomenon, known as "Package Hallucination," has created a massive security hole.

Note: This deep dive is part of our extensive guide on Best AI Mode Checker (2026): The Only 5 Tools That Actually Detect AI Code.

If a developer blindly installs a "ghost" package suggested by an AI, they might accidentally download malicious code that a hacker has registered under that exact name.

Below, we detail exactly how to lock down your repo against these invisible threats.

The Anatomy of an AI Supply Chain Attack

Why does this happen?

LLMs are trained on billions of lines of code. They learn patterns, not facts.

If an AI has often seen React used with a routing library such as react-router, it might suggest react-super-router-v6 simply because the name sounds plausible.

The Attack Vector:

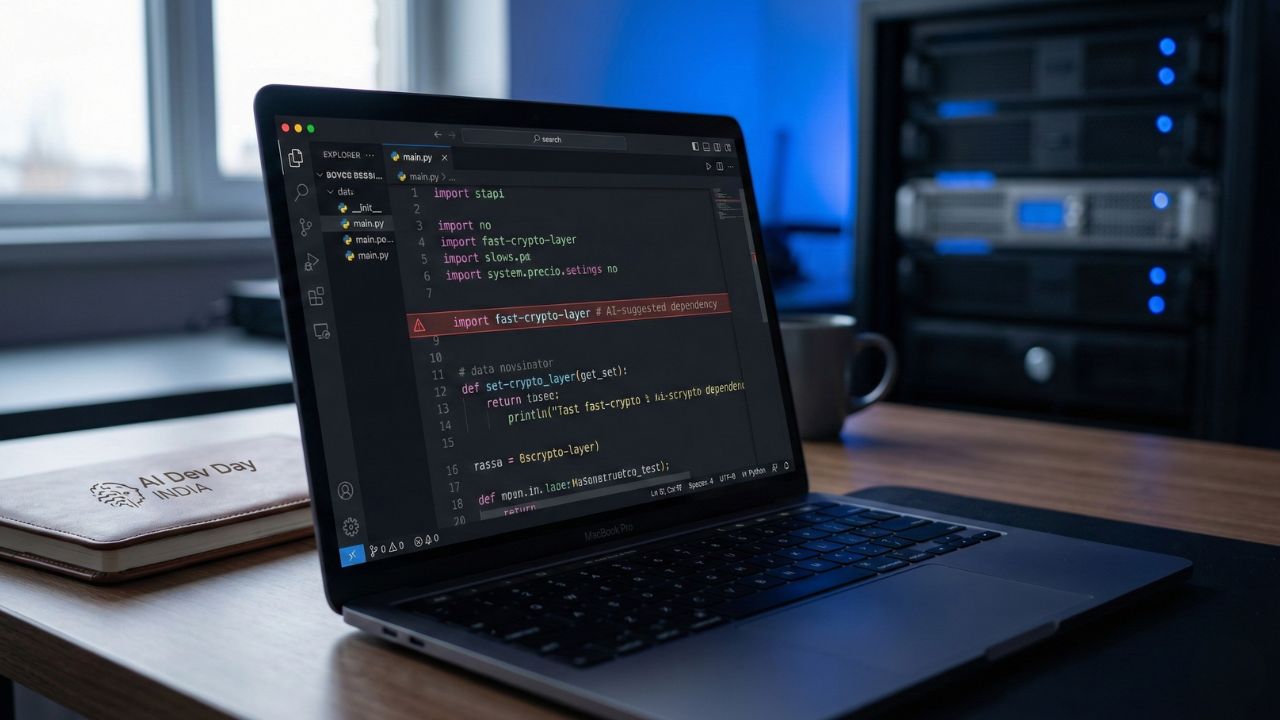

- Hallucination: The AI suggests `pip install fast-crypto-layer` (a non-existent package) to a developer.

- Registration: A hacker predicts this hallucination and registers `fast-crypto-layer` on PyPI or npm.

- Infection: The developer copies the command, installs the hacker's package, and their machine is compromised.

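Hallucinated install commands can just as easily end up in READMEs, setup guides, and code comments, which is why cleaning technical documentation is just as important as cleaning code. For verifying the integrity of documentation and comments, we recommend using tools like the DeepSeek Detector.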

Strategy 1: The "Trust but Verify" Protocol

To solve this, you must change your engineering culture. Speed is no longer the metric; provenance is.

The Verification Checklist:

- Check the Age: Was the package first published less than 30 days ago? Flag it for review.

- Check the Maintainer: Does the maintainer's GitHub profile have a real history, or is it a newly created "ghost" account?

- Check Download Stats: A genuinely popular utility library won't have only 5 downloads.
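The age and maintainer checks above can be partly automated for Python packages. This is a minimal sketch assuming PyPI's public JSON API (`https://pypi.org/pypi/<name>/json`), which returns HTTP 404 for unknown projects and lists an upload time for each release file. The function names are hypothetical, the 30-day threshold comes from the checklist, and "no project URLs" is only a rough stand-in for the maintainer check. The endpoint does not report usable download counts, so the download-stats check is left out.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timedelta, timezone
from typing import Optional

# PyPI's public JSON endpoint; it returns HTTP 404 for projects that do not exist.
PYPI_JSON_URL = "https://pypi.org/pypi/{name}/json"


def fetch_pypi_metadata(name: str) -> Optional[dict]:
    """Return PyPI metadata for `name`, or None if no such project exists."""
    try:
        with urllib.request.urlopen(PYPI_JSON_URL.format(name=name), timeout=10) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:  # unknown name: possibly hallucinated, not yet registered
            return None
        raise


def first_upload(metadata: dict) -> Optional[datetime]:
    """Earliest upload time across all release files, or None if there are none."""
    times = [
        datetime.fromisoformat(f["upload_time_iso_8601"].replace("Z", "+00:00"))
        for files in metadata.get("releases", {}).values()
        for f in files
    ]
    return min(times) if times else None


def red_flags(metadata: Optional[dict], now: datetime, min_age_days: int = 30) -> list[str]:
    """Apply the age and (rough) maintainer checks; heuristics, not a verdict."""
    if metadata is None:
        return ["package does not exist on the index"]
    flags = []
    created = first_upload(metadata)
    if created is None:
        flags.append("no uploaded release files")
    elif now - created < timedelta(days=min_age_days):
        flags.append(f"first published less than {min_age_days} days ago")
    # Hypothetical maintainer proxy: no project URLs means no repository to inspect.
    if not (metadata.get("info", {}).get("project_urls") or {}):
        flags.append("no project URLs, so the source repository cannot be checked")
    return flags


# Example (performs a network request):
# red_flags(fetch_pypi_metadata("fast-crypto-layer"), datetime.now(timezone.utc))
```

Treat any returned flag as a reason for human review rather than an automatic verdict; a brand-new package can be legitimate, and an old one can be compromised.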

Strategy 2: Automating Package Validation

You cannot rely on human memory. You need CI/CD guardrails.

Effective measures include:

- Lockfiles: Strict adherence to `package-lock.json` or `poetry.lock`, so every build installs exactly the reviewed dependency versions recorded there.

- Scoped Registries: Only allow installation of packages from trusted namespaces (e.g., the `@google-cloud` scope on npm).

- Typosquatting Scanners: Use tools that flag packages with names dangerously similar to popular libraries.
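A typosquatting scanner can start as a simple string-similarity check against a list of popular names. This sketch assumes Python's standard `difflib` similarity ratio with a cutoff of 0.8 and a short, hypothetical `POPULAR_PACKAGES` list; production scanners use far larger lists and usually several distance measures. Names are normalized as described in PEP 503 so that `Requests` and `requests` compare equal.

```python
import difflib
import re

# Hypothetical list of popular names; a real scanner would load a much larger
# list, such as the most-downloaded packages on the index.
POPULAR_PACKAGES = ["requests", "numpy", "pandas", "django", "flask", "urllib3"]


def normalize(name: str) -> str:
    """Normalize a Python project name as PEP 503 does (lowercase, -_. runs to -)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def typosquat_candidates(
    name: str, popular: list[str] = POPULAR_PACKAGES, cutoff: float = 0.8
) -> list[str]:
    """Return popular names that `name` closely resembles without matching exactly."""
    candidate = normalize(name)
    known = [normalize(p) for p in popular]
    if candidate in known:
        return []  # exact match with a known package: nothing to flag
    # get_close_matches ranks by difflib.SequenceMatcher's similarity ratio (0..1).
    return difflib.get_close_matches(candidate, known, n=3, cutoff=cutoff)
```

For example, `typosquat_candidates("reqests")` flags `requests`, while the exact name `requests` returns an empty list. In CI, a non-empty result for any newly added dependency could fail the build until someone confirms the name.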

Strategy 3: Private Artifact Servers

For enterprise teams, the "Nuclear Option" is often the best.

By using a private artifact server (like Artifactory or AWS CodeArtifact), you create a "Walled Garden."

Developers cannot pull directly from the public internet. They can only install packages that have been whitelisted and cached in your private server.

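This effectively kills the "hallucination attack" because the fake package won't exist in your private registry, provided the server serves only approved packages instead of transparently fetching any unknown name from the public index on demand.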
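A walled garden only holds if pip cannot reach the public index by some other route. One common leak is `extra-index-url`, which makes pip consult an additional index alongside the primary one. The sketch below audits a pip configuration file with Python's `configparser`; `audit_pip_config` and the host name `artifacts.example.internal` are hypothetical, and it only inspects the `[global]` and `[install]` sections, not environment variables such as `PIP_INDEX_URL` or command-line flags.

```python
import configparser
from urllib.parse import urlparse


def audit_pip_config(config_text: str, private_host: str) -> list[str]:
    """Flag pip settings that would let installs bypass the private registry."""
    # interpolation=None so '%' characters in URLs are not treated as placeholders.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    problems = []
    index_urls = []
    for section in ("global", "install"):
        if not parser.has_section(section):
            continue
        index_url = parser.get(section, "index-url", fallback=None)
        if index_url:
            index_urls.append(index_url)
            host = urlparse(index_url).hostname
            if host != private_host:
                problems.append(f"[{section}] index-url points at {host}, not {private_host}")
        if parser.get(section, "extra-index-url", fallback=None):
            problems.append(f"[{section}] extra-index-url lets pip also search another index")
    if not index_urls:
        problems.append("no index-url set, so pip uses the public PyPI by default")
    return problems
```

An empty result only means this file looks locked down; network egress rules on build machines are what actually stop a direct pull from the public internet.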

Conclusion

Knowing how to prevent AI supply chain attacks in code is the defining security skill of 2026.

As AI models get more creative, their hallucinations will become more convincing. You must treat every AI-generated import statement as a potential threat vector until proven otherwise.

Secure your supply chain today, or debug a breach tomorrow.

Frequently Asked Questions (FAQ)

What is an AI supply chain attack?

It is a cyberattack where hackers exploit AI-generated code suggestions. They register the names of non-existent ("hallucinated") software libraries that AI models frequently suggest, waiting for developers to inadvertently install them.

How can I tell if a Python package suggested by AI is fake?

Always check the Python Package Index (PyPI) manually before installing. Look for red flags: packages with no version history, zero stars on the linked GitHub repo, or generic descriptions that match the AI's prompt exactly.
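Two of these red flags, a missing version history and a description that mirrors the prompt, can be roughly scored from PyPI metadata. The sketch below is illustrative: it assumes metadata shaped like PyPI's JSON API response (`releases` and `info.summary`), and `manual_check_flags` and the 0.7 similarity cutoff are hypothetical choices. Star counts live on GitHub, not PyPI, so they are not checked here.

```python
import difflib


def manual_check_flags(metadata: dict, prompt: str, similarity_cutoff: float = 0.7) -> list[str]:
    """Score two of the FAQ's red flags from PyPI-style metadata (a rough heuristic)."""
    flags = []
    # "releases" maps each version string to its uploaded files.
    if len(metadata.get("releases", {})) <= 1:
        flags.append("no version history (at most one release)")
    summary = (metadata.get("info", {}).get("summary") or "").lower()
    # Compare the package summary with the prompt the developer gave the AI.
    similarity = difflib.SequenceMatcher(None, summary, prompt.lower()).ratio()
    if summary and similarity >= similarity_cutoff:
        flags.append(f"summary closely matches the prompt (similarity {similarity:.2f})")
    return flags
```

A package that trips both checks is exactly the profile of a name registered to catch a specific hallucination, but either flag alone can also describe an honest new project.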

Can AI code checkers detect malicious dependencies?

Yes, advanced AI Code Integrity Checkers can analyze pull requests not just for syntax, but for suspicious imports. They can cross-reference imported libraries against a database of known safe packages.

How does typosquatting work with AI?

Attackers use LLMs to generate thousands of potential typos for popular packages (e.g., numpuy instead of numpy). They then register these names, knowing that both humans and AI models often make typos.
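Defenders can generate the same kind of look-alike names to monitor a registry for suspicious new registrations. The sketch below swaps in a simple deterministic technique, generating every name one edit away (a deletion, transposition, substitution, or insertion of a lowercase letter), rather than the LLM-based generation described above. `typo_variants` is a hypothetical helper, and it ignores digits and separators.

```python
import string


def typo_variants(name: str) -> set[str]:
    """All names exactly one edit away from `name` (lowercase letters only)."""
    letters = string.ascii_lowercase
    splits = [(name[:i], name[i:]) for i in range(len(name) + 1)]
    deletes = {a + b[1:] for a, b in splits if b}
    transposes = {a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1}
    replaces = {a + c + b[1:] for a, b in splits if b for c in letters}
    inserts = {a + c + b for a, b in splits for c in letters}
    # Some edits (e.g., swapping two identical letters) reproduce the original name.
    return (deletes | transposes | replaces | inserts) - {name}
```

Both examples in this answer fall out of it: `numpuy` is an insertion variant of `numpy`, and `reqests` is a deletion variant of `requests`. Each variant can then be looked up on the registry to see whether someone has claimed it.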

Do private registries prevent hallucination attacks?

Yes. Private hosting acts as a firewall. Since the "hallucinated" package exists only on the public internet (registered by the hacker) and not in your private server, the installation attempt will fail, protecting the developer.