How is Elo Calculated in LMSYS? The Secret Math Behind AI Leaderboards

Quick Answer: Key Takeaways

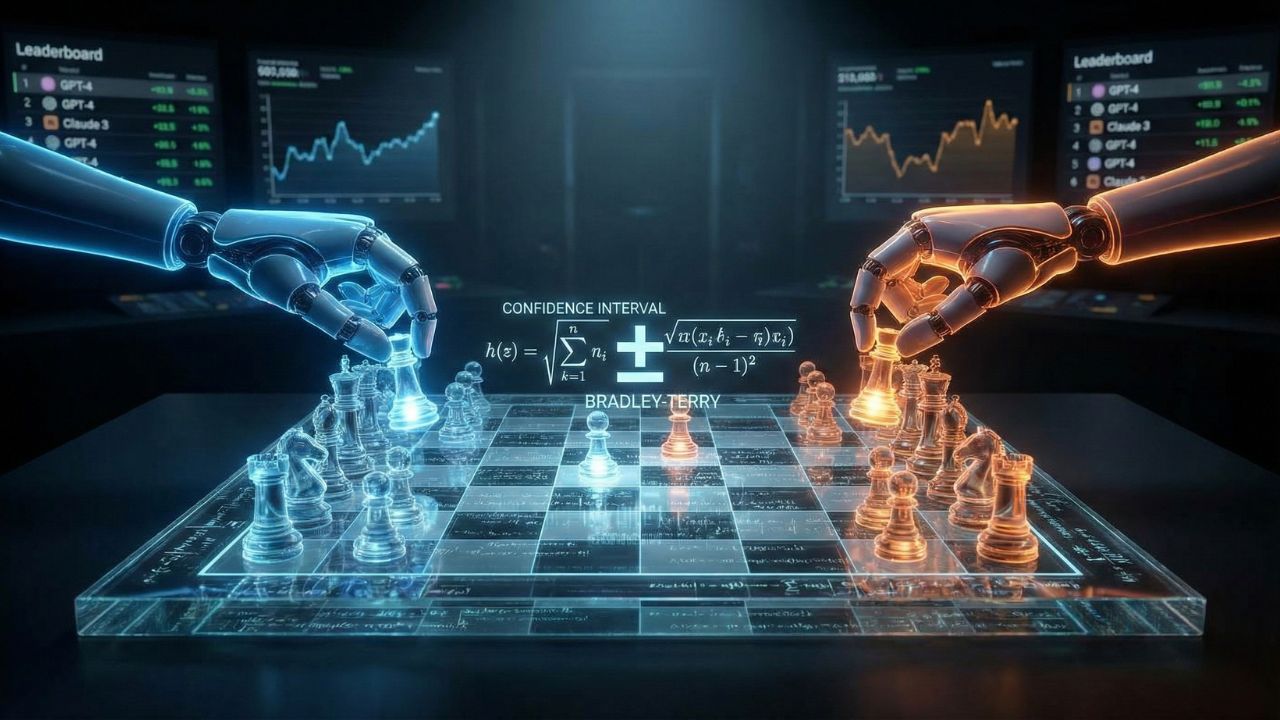

- The Foundation: LMSYS uses the Bradley-Terry statistical model, not the basic Chess Elo system, to predict win probabilities.

- The Uncertainty: The "±" value (Confidence Interval) is often more important than the score itself; it shows statistical stability.

- The Process: Scores are derived from thousands of blind, pairwise battles between models like GPT-4 and Claude 3.

- Bootstrapping: LMSYS uses a statistical technique called "bootstrapping" to resample the battle data and measure how much of a ranking could be down to luck.

- The Tie: Ties are weighted carefully; LMSYS doesn't discard them, but uses them to adjust the win probability for both models.

Decoding the Scoreboard

You see a score of 1287 next to a new model, but how does LMSYS calculate that Elo score, and how does it differ from a chess rating?

If you don't understand the math, you are likely misinterpreting the leaderboard. A 5-point difference might look like a win, but statistically, it could be a tie.

This deep dive is part of our extensive guide on LMSYS Chatbot Arena Current Rankings: Why the Elo King Just Got Dethroned. We are peeling back the layers of the algorithm to show you why some rankings are rock solid and others are just noise.

It's Not Just Wins and Losses (The Bradley-Terry Model)

LMSYS doesn't use a simple "+1 for a win" system. It uses the Bradley-Terry model, a probabilistic approach designed for pairwise comparisons.

Instead of just tracking victories, this model calculates the probability that Model A will beat Model B.
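To make "the probability that Model A will beat Model B" concrete, here is a minimal sketch that maps a rating gap to a win probability. It assumes the conventional Elo-style logistic curve (base 10, scale 400); the function name `win_probability` is our own illustration, not something taken from LMSYS code.

```python
def win_probability(rating_a: float, rating_b: float,
                    scale: float = 400.0, base: float = 10.0) -> float:
    """Chance that model A beats model B under a logistic rating curve.

    scale and base are assumed defaults (the usual Elo convention).
    """
    return 1.0 / (1.0 + base ** ((rating_b - rating_a) / scale))


if __name__ == "__main__":
    print(round(win_probability(1000, 1000), 3))  # 0.5
    print(round(win_probability(1005, 1000), 3))  # 0.507
    print(round(win_probability(1400, 1000), 3))  # 0.909
```

Under these assumptions, a 5-point lead translates to roughly a 50.7% chance of winning a single battle, which is barely better than a coin flip.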

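An upset carries more information than an expected result. If a low-ranked model beats a high-ranked giant, the fitted ratings shift noticeably; if a giant beats a novice, they barely move. Because Bradley-Terry ratings are fit to all battles at once rather than updated one match at a time, the result does not depend on the order in which battles happened, which helps keep the leaderboard consistent even when models have vastly different battle counts.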
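For readers who want to see how ratings can be fit from a table of wins, the sketch below estimates Bradley-Terry strengths with a simple iterative update (the classic minorization-maximization scheme) and converts them to an Elo-like scale. This is an illustration under our own assumptions, not LMSYS's code: the function names, the 1000-point anchor, and the sample numbers are hypothetical, and LMSYS may use a different optimizer.

```python
import math


def fit_bradley_terry(wins, iterations=200):
    """Estimate Bradley-Terry strengths from a win-count matrix.

    wins[i][j] is how many times model i beat model j.
    Assumes every model has at least one win and one battle.
    """
    n = len(wins)
    strengths = [1.0] * n
    for _ in range(iterations):
        updated = []
        for i in range(n):
            total_wins = sum(wins[i][j] for j in range(n) if j != i)
            denom = sum(
                (wins[i][j] + wins[j][i]) / (strengths[i] + strengths[j])
                for j in range(n) if j != i
            )
            updated.append(total_wins / denom)
        # Rescale so the geometric mean stays at 1 (strengths are relative).
        log_mean = sum(math.log(s) for s in updated) / n
        strengths = [s / math.exp(log_mean) for s in updated]
    return strengths


def to_elo_scale(strengths, scale=400.0, anchor=1000.0):
    """Map strengths to an Elo-like scale (scale and anchor are assumptions)."""
    return [anchor + scale * math.log10(s) for s in strengths]


if __name__ == "__main__":
    # Hypothetical results for three models, ten battles per pair.
    sample_wins = [
        [0, 7, 9],
        [3, 0, 6],
        [1, 4, 0],
    ]
    print([round(r) for r in to_elo_scale(fit_bradley_terry(sample_wins))])
```

Every battle is used in every pass, so shuffling the order of the battles leaves the fitted ratings unchanged.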

The "Confidence Interval" (±): The Most Ignored Stat

Next to every Elo score there is a small number, shown in a form such as ±20. This is the confidence interval, and it is critical for accurate analysis.

If Model A is 1250 (±20) and Model B is 1240 (±20), their intervals overlap: 1230 to 1270 against 1220 to 1260. You cannot definitively say Model A is better.
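The overlap check itself is simple arithmetic. A small sketch, using a hypothetical helper name `intervals_overlap` and treating the ± value as a symmetric margin around the score (an assumption; published intervals can be asymmetric):

```python
def intervals_overlap(score_a: float, margin_a: float,
                      score_b: float, margin_b: float) -> bool:
    """True when the two score ranges share at least one point."""
    low_a, high_a = score_a - margin_a, score_a + margin_a
    low_b, high_b = score_b - margin_b, score_b + margin_b
    return low_a <= high_b and low_b <= high_a


if __name__ == "__main__":
    print(intervals_overlap(1250, 20, 1240, 20))  # True
    print(intervals_overlap(1300, 10, 1250, 10))  # False
```

Overlap is a quick screening rule rather than a formal significance test, but it is enough to stop you from reading a 10-point gap as a clear win.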

This statistical overlap is why we see such tight competition in matchups like DeepSeek R1 vs GPT 5.1 Arena, where the scores are often within the margin of error.

Bootstrapping: Removing the Luck Factor

How does LMSYS ensure a lucky streak doesn't ruin the data? They use a technique called Bootstrapping.

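This involves creating many virtual datasets by resampling the original battles with replacement and recomputing the ratings on each one. The median across these variations gives a stable score, and the spread between them gives the confidence interval, so a handful of lucky results has little influence on the final number.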
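The resampling idea can be sketched in a few lines. To keep the example short it bootstraps only the rating gap between two models from their head-to-head results, rather than refitting a full leaderboard; the helper names, the 1000 rounds, the fixed seed, and the 60-to-40 sample record are all assumptions made for illustration.

```python
import math
import random
import statistics


def rating_gap(outcomes):
    """Elo-scale gap implied by head-to-head results (1 = A won, 0 = B won)."""
    wins_a = sum(outcomes)
    wins_b = len(outcomes) - wins_a
    # Guard against a resample where one side has no wins (log of zero).
    wins_a, wins_b = max(wins_a, 0.5), max(wins_b, 0.5)
    return 400.0 * math.log10(wins_a / wins_b)


def bootstrap_gap(outcomes, rounds=1000, seed=0):
    """Return (median, low, high) of the gap over resampled datasets."""
    rng = random.Random(seed)
    gaps = sorted(
        rating_gap(rng.choices(outcomes, k=len(outcomes)))  # sample with replacement
        for _ in range(rounds)
    )
    low = gaps[int(0.025 * rounds)]
    high = gaps[int(0.975 * rounds) - 1]
    return statistics.median(gaps), low, high


if __name__ == "__main__":
    battles = [1] * 60 + [0] * 40  # hypothetical record: A won 60 of 100
    median, low, high = bootstrap_gap(battles)
    print(f"gap about {median:.0f} (95% interval {low:.0f} to {high:.0f})")
```

With only 100 battles the interval comes out wide, which is the same effect that makes newly added models show large ± values on the leaderboard.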

This robust process is why the standard Arena scores are often more stable than specific sub-sets like those seen in our Arena Hard vs LMSYS Arena comparison.

Why Ties Matter More Than You Think

In AI battles, models often refuse to answer or give equally good responses. LMSYS records a tie as its own outcome rather than discarding it, which pulls the predicted win probability between the two models toward 50%.

- Tie-both-bad: Signals a difficult prompt or poor filtering.

- Tie-both-good: Signals the models have reached a capability plateau.

Ignoring ties would artificially inflate the volatility of the rankings.
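One way to picture tie handling is to count each tie as half a win for each side before fitting ratings. The sketch below does only that tallying step. The outcome labels (`"model_a"`, `"model_b"`, and anything else counted as a tie) and the function name `tally_battles` are assumptions for illustration, not a confirmed description of the LMSYS pipeline.

```python
from collections import defaultdict


def tally_battles(battles):
    """Build win counts from (model_a, model_b, winner) records.

    winner is assumed to be "model_a", "model_b", or a tie label;
    any tie label adds half a win to each side.
    """
    wins = defaultdict(float)
    for model_a, model_b, winner in battles:
        if winner == "model_a":
            wins[(model_a, model_b)] += 1.0
        elif winner == "model_b":
            wins[(model_b, model_a)] += 1.0
        else:
            wins[(model_a, model_b)] += 0.5
            wins[(model_b, model_a)] += 0.5
    return dict(wins)


if __name__ == "__main__":
    sample = [
        ("model-x", "model-y", "model_a"),
        ("model-x", "model-y", "tie"),
        ("model-y", "model-x", "tie (bothbad)"),
        ("model-x", "model-y", "model_b"),
    ]
    print(tally_battles(sample))
```

In this sample each model ends up with 2.0 wins over the other, so the two ties plus one win apiece leave them exactly level.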

Conclusion: Trusting the Math

Understanding how LMSYS calculates Elo transforms the leaderboard from a simple list into a strategic tool. It prevents you from overreacting to minor score fluctuations.

The math shows that although individual rankings shift, statistical tiers remain a more reliable way to choose your AI.

Frequently Asked Questions (FAQ)

LMSYS uses the Bradley-Terry model, which estimates the probability of Model A beating Model B based on their current rating difference. It uses maximum likelihood estimation (MLE) to derive the final scores from thousands of pairwise comparisons.

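The confidence interval represents the range of uncertainty. If a model has a score of 1300 ± 20, its 95% interval runs from 1280 to 1320, the range its score is expected to land in given the battles collected so far. When two models' intervals overlap, the data cannot reliably separate them, so they are best treated as a statistical tie.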

While LMSYS displays scores early, a model typically needs several hundred (often 500+) unique pairwise battles to narrow the confidence interval enough for a stable, reliable ranking.

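The Bradley-Terry model and the standard Elo system used in chess are closely related: both turn a rating difference into a win probability through a logistic curve. The difference is in how ratings are estimated. Chess Elo updates ratings one game at a time, so recent games and game order influence the result, while the Bradley-Terry model fits all paired comparisons at once to predict the probability of a judge preferring item A over item B.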

In the LMSYS system, a tie is treated as half a win and half a loss for both models. As a result, persistent ties between two models draw their Elo ratings closer together over time, stabilizing their relative positions.

Sources & References

External Resources:

- LMSYS Org: Chatbot Arena Methodology – The official technical breakdown of the scoring system.

- arXiv: Chatbot Arena: An Open Platform for Evaluating LLMs – The academic paper detailing the bootstrapping process.

Internal Deep Dives:

- LMSYS Chatbot Arena Current Rankings

- DeepSeek R1 vs GPT 5.1 Arena

- Arena Hard vs LMSYS Arena