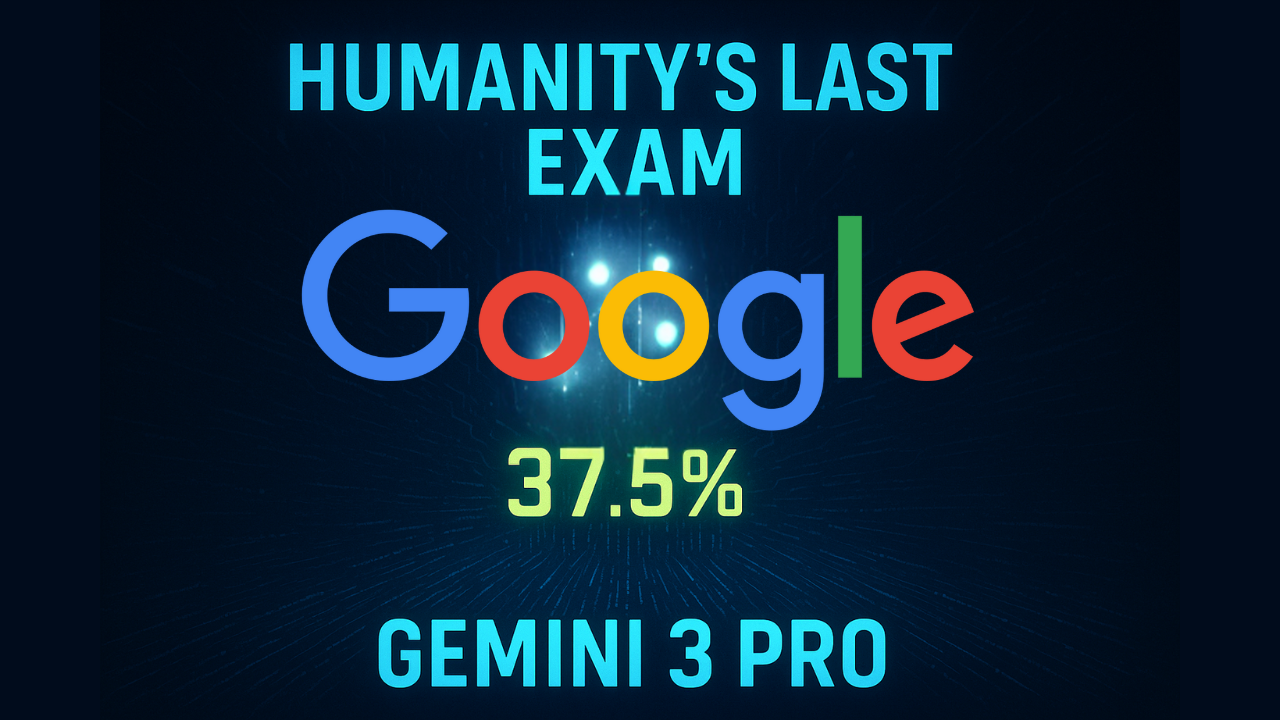

Gemini 3 Pro vs Humanity's Last Exam: Breaking the 37.5% Barrier

For months, the AI community has faced a measurement crisis. With top models scoring over 90% on established benchmarks such as MMLU, those benchmarks could no longer show how much real progress was being made. In response, nearly 1,000 experts created "Humanity's Last Exam" (HLE), a benchmark built at the frontier of human knowledge and designed to be hard for today's best AI. Google's Gemini 3 Pro has now set a new state-of-the-art score on it. The release raises the performance ceiling for AI reasoning, and the model is already being integrated into developer tools such as GitHub Copilot.

What is Gemini 3 Pro and Why Does It Matter?

Gemini 3 Pro is Google DeepMind's latest and most intelligent AI model, designed to execute sophisticated tasks through advanced reasoning and world-leading multimodal understanding. It builds on earlier Gemini versions and extends their capabilities in several areas.

Its core features include:

- State-of-the-Art Reasoning: It provides responses with "unprecedented nuance and depth," moving beyond simple information retrieval to genuine insight.

- World-Leading Multimodal Understanding: The model seamlessly processes and synthesizes information across text, images, video, audio, and code.

- Agentic Coding: The model is better at acting as an agent: it follows complex instructions and calls tools more reliably, an improvement described as "meaningful improved tool use."

- Vibe Coding: It is better at "vibe coding," turning natural-language prompts and sketches into responsive, interactive front-end experiences.

Shattering the Ceiling: Dominating "Humanity's Last Exam"

One of the most telling indicators of Gemini 3 Pro's capabilities is its performance on Humanity's Last Exam (HLE), a benchmark designed to test the frontier of AI knowledge and reasoning. Existing AI benchmarks have become saturated: as the abstract of the HLE paper on arXiv notes, leading LLMs now achieve "over 90% accuracy on popular benchmarks like MMLU, limiting informed measurement of state-of-the-art LLM capabilities." HLE was created to provide a harder test at the frontier of human knowledge. Gemini 3 Pro has set a new state-of-the-art score on it, outscoring both its predecessor, Gemini 2.5 Pro, and OpenAI's GPT-5.1 by double-digit percentage-point margins.

Gemini 3 Pro’s State-of-the-Art Performance

| Model | Humanity's Last Exam score (no tools) |
|---|---|
| Gemini 3 Pro | 37.5% |
| GPT-5.1 | 26.5% |
| Gemini 2.5 Pro | 21.6% |

While this score is a new record, the creators of the benchmark provide important context: "All frontier models achieve low accuracy on Humanity's Last Exam, highlighting significant room for improvement." This demonstrates both Gemini 3 Pro's lead and the immense difficulty of the challenges that advanced AI is now being tested against.
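The size of the lead can be expressed two ways: as an absolute gap in percentage points, or as a relative increase over the other model's score. The short calculation below uses only the three scores in the table; the names `scores` and `margins` are illustrative.

```python
# Humanity's Last Exam scores (no tools), in percent, from the table above.
scores = {"Gemini 3 Pro": 37.5, "GPT-5.1": 26.5, "Gemini 2.5 Pro": 21.6}

def margins(leader: str, other: str) -> tuple[float, float]:
    """Return (percentage-point gap, relative increase in %) of leader over other."""
    a, b = scores[leader], scores[other]
    return round(a - b, 1), round((a / b - 1) * 100, 1)

for rival in ("GPT-5.1", "Gemini 2.5 Pro"):
    points, relative = margins("Gemini 3 Pro", rival)
    print(f"Gemini 3 Pro vs {rival}: +{points} points ({relative}% relative)")

# Share of questions Gemini 3 Pro still does not answer correctly.
print(f"Not answered correctly: {100 - scores['Gemini 3 Pro']}%")
```

The lead is 11.0 points over GPT-5.1 (about 41.5% higher) and 15.9 points over Gemini 2.5 Pro (about 73.6% higher), yet 62.5% of the exam is still not answered correctly, which is exactly the benchmark creators' point.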

From Theory to Practice: Gemini 3 in the Wild

Beyond academic benchmarks, Gemini 3 Pro is already being deployed in professional tools, validating its practical value for complex, real-world tasks.

Powering the Next Generation of GitHub Copilot

Gemini 3 Pro is rolling out in public preview in GitHub Copilot for Copilot Pro, Pro+, Business, and Enterprise subscribers. This integration brings the model's coding and reasoning capabilities directly into the developer workflow. GitHub reports strong early results: "In our early testing in VS Code, Gemini 3 Pro demonstrated 35% higher accuracy in resolving software engineering challenges than Gemini 2.5 Pro." The integration signals a broader industry trend: frontier models are no longer just API endpoints but are becoming deeply embedded co-pilots in professional workflows.

Industry Leaders Weigh In

Early access partners have reported significant gains across various industries, highlighting the model's versatility and power.

| Industry leader | Quote |
|---|---|
| Michele Catasta, President & Head of AI, Replit | "Gemini 3 Pro truly stands out for its design capabilities, offering an unprecedented level of flexibility while creating apps. Like a skilled UI designer, it can range from well-organized wireframes to stunning high-fidelity prototypes." |
| Joel Hron, CTO, Thomson Reuters | "Our early evaluations indicate that Gemini 3 is delivering state-of-the-art reasoning with depth and nuance. We have observed measurable and significant progress in both legal reasoning and complex contract understanding." |

The Science Behind the Leap in AI Reasoning

The performance gains in Gemini 3 Pro build on years of AI research into how models reason and solve problems. Google DeepMind has not detailed Gemini 3 Pro's architecture, but its performance in multi-step problem solving and factual consistency suggests that it builds on foundational techniques such as Chain-of-Thought (CoT) reasoning and Retrieval-Augmented Generation (RAG). The arXiv paper "Advancing Reasoning in Large Language Models" outlines these techniques.

- Chain-of-Thought (CoT) Reasoning: This technique improves accuracy by prompting the model to break down a problem into a "series of intermediate steps," mimicking human problem-solving.

- Retrieval-Augmented Generation (RAG): This technique retrieves relevant passages from external knowledge sources and adds them to the model's context before it generates a response. Grounding answers in retrieved data "helps mitigate hallucinations."
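The two techniques can be combined in a few lines. The sketch below is a toy illustration, not a description of how Gemini 3 Pro works internally: it assumes a small hypothetical in-memory document list, ranks passages by simple word overlap rather than the embedding search a production RAG system would typically use, and ends the prompt with a step-by-step instruction as a basic form of Chain-of-Thought prompting. The names `DOCUMENTS`, `retrieve`, and `build_prompt` are illustrative.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query a search index or vector store.
DOCUMENTS = [
    "Humanity's Last Exam (HLE) was created by nearly 1,000 experts.",
    "MMLU is a popular benchmark on which top models exceed 90% accuracy.",
    "Retrieval-augmented generation adds retrieved passages to a model's prompt.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric tokens, used for a simple lexical-overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents sharing the most tokens with the query."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]

def build_prompt(question: str, passages: list[str]) -> str:
    """Augmentation step: ground the question in retrieved context and ask for
    intermediate reasoning steps (Chain-of-Thought style)."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below and cite passages by number.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Let's think step by step."
    )

question = "Who created Humanity's Last Exam?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)  # this string would then be sent to the model (generation step)
```

Keeping retrieval and prompt construction as separate functions makes it easy to swap the toy overlap scorer for a real retriever without touching the prompt format.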

Access and Pricing

Google is making Gemini 3 Pro accessible to developers through its API, with a clear pricing structure for production applications. The standard "Paid Tier" pricing for the Gemini 3 Pro Preview model is structured as follows for prompts up to 200,000 tokens:

| Model | Input price (USD per 1M tokens) | Output price (USD per 1M tokens) |
|---|---|---|
| Gemini 3 Pro Preview | $2.00 | $12.00 |
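With these rates, the cost of a request is input tokens × $2.00 / 1M plus output tokens × $12.00 / 1M. The helper below is a minimal sketch that uses only the two prices in the table; `estimate_cost` is a hypothetical name, and it deliberately rejects prompts over 200,000 tokens because the table does not cover that tier.

```python
# Gemini 3 Pro Preview paid-tier rates from the table above (prompts up to 200,000 tokens).
INPUT_USD_PER_MILLION = 2.00
OUTPUT_USD_PER_MILLION = 12.00
MAX_PROMPT_TOKENS = 200_000

def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the <=200k-token prompt rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens > MAX_PROMPT_TOKENS:
        # The table above only lists rates for prompts up to 200,000 tokens.
        raise ValueError("prompt exceeds 200,000 tokens; rate not covered here")
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000

print(f"${estimate_cost(100_000, 10_000):.2f}")  # 100k-token prompt, 10k-token reply
```

For example, a 100,000-token prompt with a 10,000-token response comes to $0.20 + $0.12 = $0.32. Output tokens cost six times as much as input tokens, so long responses dominate the bill faster than long prompts.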

The Remaining Challenges and The Road Ahead

Despite these breakthroughs, AI reasoning is far from a solved problem. Persistent challenges highlighted by researchers include:

- Hallucinations: Generating plausible but factually incorrect information.

- Generalization Across Domains: Transferring reasoning skills effectively from one subject to another.

- Robustness to Adversarial Attacks: Ensuring models are not easily misled by subtle changes in input prompts.

The release of Gemini 3 Pro is a notable milestone. Its state-of-the-art score on a benchmark explicitly designed to measure the limits of AI reasoning suggests that the industry is making tangible progress on one of its hardest problems. At the same time, its rapid integration into a mission-critical tool like GitHub Copilot shows that these advances are not confined to the lab; they are already creating practical value for professionals. Still, the road ahead is long. The challenges this generation of models still faces (hallucinations, brittle generalization, and vulnerability to adversarial prompts) are the fundamental barriers that separate today's advanced reasoning from true cognition. Gemini 3 Pro has raised the ceiling, but the quest for artificial general intelligence continues.


Frequently Asked Questions (FAQs)

What is "agentic coding" and how does Gemini 3 Pro improve it?

"Agentic coding" refers to an AI's ability to act as an agent that can understand complex instructions, call external tools, and perform multi-step tasks. Gemini 3 Pro improves this with "meaningful improved tool use" and "exceptional instruction following," which are critical for building more helpful and intelligent AI assistants for coding.
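The agent loop described in this answer can be sketched as follows. This is a toy harness under stated assumptions, not the Gemini API or GitHub Copilot's implementation: `scripted_model` is a hypothetical stand-in that replays a fixed sequence of JSON actions where a real agent would call an LLM, and the tools `read_file` and `write_file` operate on an in-memory dictionary. What it shows is the control flow: the model picks a tool, the harness runs it and appends the result to the history, and the loop repeats until the model returns a final answer or a step limit is reached.

```python
import json
from typing import Callable

# Hypothetical in-memory "repository" containing a deliberate bug.
FAKE_REPO = {"math_utils.py": "def add(a, b):\n    return a - b\n"}

def read_file(path: str) -> str:
    """Tool: return a file's contents, or an error message."""
    return FAKE_REPO.get(path, f"error: {path} not found")

def write_file(path: str, content: str) -> str:
    """Tool: overwrite a file in the fake repository."""
    FAKE_REPO[path] = content
    return "ok"

TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file, "write_file": write_file}

def scripted_model(history: list[dict]) -> str:
    """Stand-in for an LLM call: emits one JSON action per turn, chosen by
    how many tool results are already in the history."""
    turn = sum(1 for m in history if m["role"] == "tool")
    script = [
        {"tool": "read_file", "args": {"path": "math_utils.py"}},
        {"tool": "write_file", "args": {"path": "math_utils.py",
                                        "content": "def add(a, b):\n    return a + b\n"}},
        {"final": "Fixed the sign bug in add()."},
    ]
    return json.dumps(script[turn])

def run_agent(task: str, model: Callable[[list[dict]], str], max_steps: int = 5) -> str:
    """Ask the model for an action, execute tool calls, feed results back, repeat."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = json.loads(model(history))
        if "final" in action:
            return action["final"]
        tool = TOOLS.get(action["tool"])
        result = tool(**action["args"]) if tool else f"error: unknown tool {action['tool']}"
        history.append({"role": "tool", "content": result})
    return "stopped: step limit reached"

print(run_agent("Fix the bug in math_utils.py", scripted_model))
```

The step limit matters in practice: a model that keeps calling tools without converging is stopped instead of looping forever.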

Why was a new benchmark like "Humanity's Last Exam" created?

It was created because state-of-the-art LLMs were achieving over 90% accuracy on popular benchmarks like MMLU, making it difficult to measure further progress. A more challenging multimodal benchmark was needed to test the frontier of expert-level knowledge and reasoning.

How does Gemini 3 Pro's performance in competitive coding compare to other models?

On the LiveCodeBench Pro benchmark, which measures competitive coding ability, Gemini 3 Pro achieves an Elo rating of 2,439. This is significantly higher than its predecessor, Gemini 2.5 Pro (1,775), and OpenAI's GPT-5.1 (2,243).
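To put these gaps in perspective, the standard Elo formula converts a rating difference into an expected score, E_A = 1 / (1 + 10^((R_B - R_A) / 400)). The sketch below applies that textbook formula to the ratings quoted above. It assumes the LiveCodeBench Pro ratings can be read on the usual Elo scale; the benchmark's own rating methodology may differ, and the results are an interpretive aid, not outcomes of head-to-head matches.

```python
# LiveCodeBench Pro Elo ratings as quoted in this FAQ.
RATINGS = {"Gemini 3 Pro": 2439, "GPT-5.1": 2243, "Gemini 2.5 Pro": 1775}

def expected_score(rating_a: float, rating_b: float) -> float:
    """Textbook Elo expected score of player A against player B, between 0 and 1."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))

for rival in ("GPT-5.1", "Gemini 2.5 Pro"):
    e = expected_score(RATINGS["Gemini 3 Pro"], RATINGS[rival])
    print(f"Gemini 3 Pro vs {rival}: expected score {e:.2f}")
```

Under the Elo model, the 196-point gap over GPT-5.1 corresponds to an expected score of about 0.76, and the 664-point gap over Gemini 2.5 Pro to about 0.98.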

Sources and References:

- Gemini 3 - Google DeepMind

- Gemini 3 Pro is in public preview for GitHub Copilot

- Gemini Developer API pricing - Google AI for Developers

- Humanity's Last Exam

- Humanity's Last Exam - arXiv

- Advancing Reasoning in Large Language Models: Promising Methods and Approaches - arXiv

- AI in strategic business planning: Empirical evidence on decision-making and competitive outcomes - Computer Science Journals
