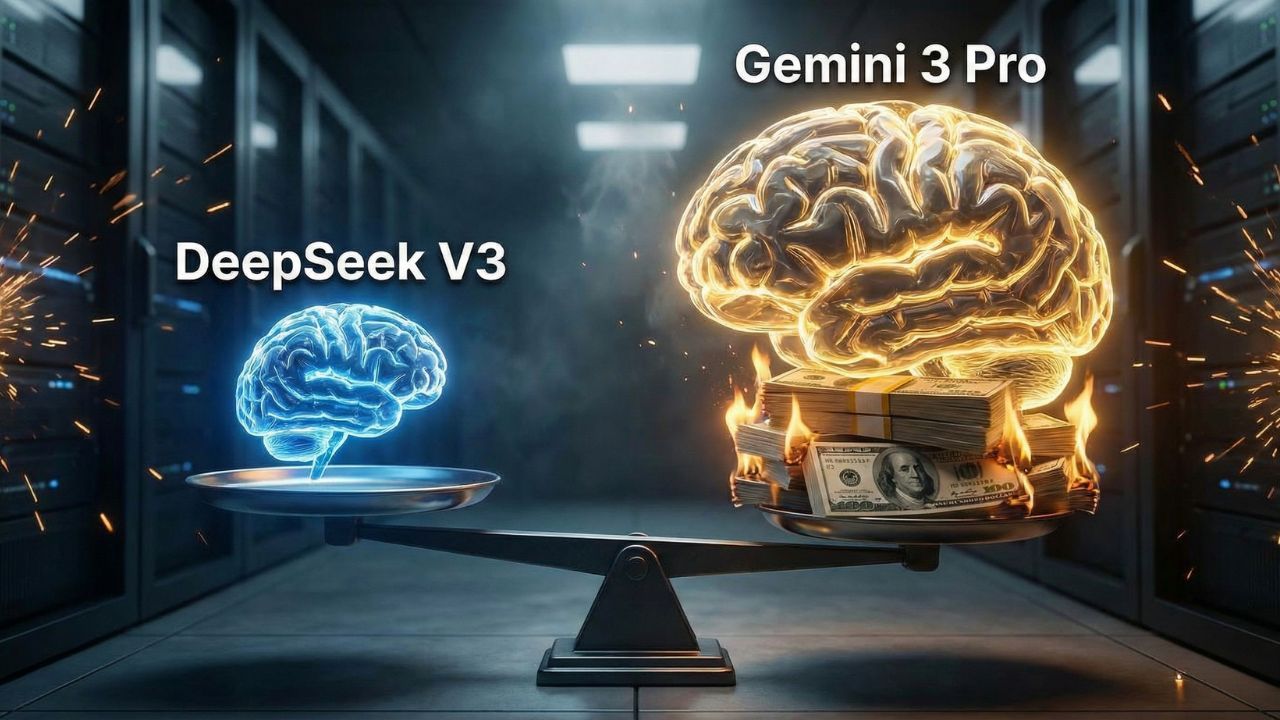

Cost Per Token vs Performance: Is Gemini 3 Pro Worth the 10x Price Tag?

Quick Answer: Key Takeaways

- The Price Gap: At ~$4.50 per million input tokens, Gemini 3 Pro is roughly 16x more expensive than DeepSeek V3 ($0.27/1M).

- The "SOTA Tax": You are paying a premium of well over 1,000% for a roughly 5-10% gain in reasoning capability on standard benchmarks.

- The ROI Verdict: For 90% of routine coding and chatbot tasks, "SOTA" models are burning money.

- The Smart Strategy: The smart money is on "Good Enough" models for volume and "Smart" models for exceptions.

In 2026, intelligence is a commodity. But unlike electricity, where a kilowatt is a kilowatt, the price of "AI intelligence" varies wildly depending on the brand name.

CTOs are currently facing a dilemma: Do you pay the premium for the absolute best model on the leaderboard, or do you opt for a model that is 10x cheaper and "almost" as good?

This deep dive is part of our extensive guide on Interpreting LLM benchmark scores: Why "Humanity’s Last Exam" is Lying to You.

When to Pay Up: Only switch to Gemini 3 Pro or GPT-5 when you need massive context windows (1M+) or complex, multi-step reasoning that cheaper models fail at.

The Unit Economics of Intelligence

To understand cost per token vs performance, you have to look at the raw numbers.

As of January 2026, the pricing disparity is staggering:

- Gemini 3 Pro: ~$4.50 / 1M Input Tokens.

- GPT-5: ~$3.44 / 1M Input Tokens.

- DeepSeek V3: ~$0.27 / 1M Input Tokens.

The Math: You can run 16 million tokens through DeepSeek V3 for the price of 1 million tokens through Gemini 3 Pro.

Does Gemini 3 Pro provide 16x more value? For most applications, the answer is a hard no.
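To make the ratio explicit, here is a minimal sketch that recomputes it from the prices listed above. The function names (`price_ratio`, `input_cost`) are illustrative and not part of any vendor SDK; the numbers are the article's own January 2026 figures.

```python
# Input-token prices in USD per 1M tokens, as quoted in this article (January 2026).
PRICE_PER_M_INPUT = {
    "Gemini 3 Pro": 4.50,
    "GPT-5": 3.44,
    "DeepSeek V3": 0.27,
}


def price_ratio(model: str, baseline: str = "DeepSeek V3") -> float:
    """How many times more expensive `model` is than `baseline` per input token."""
    return PRICE_PER_M_INPUT[model] / PRICE_PER_M_INPUT[baseline]


def input_cost(model: str, tokens: int) -> float:
    """USD cost of sending `tokens` input tokens to `model`."""
    return PRICE_PER_M_INPUT[model] * tokens / 1_000_000


if __name__ == "__main__":
    for name in PRICE_PER_M_INPUT:
        print(f"{name}: {price_ratio(name):.1f}x the DeepSeek V3 input price")
    # 16M tokens on DeepSeek V3 versus 1M tokens on Gemini 3 Pro
    print(f"{input_cost('DeepSeek V3', 16_000_000):.2f} vs {input_cost('Gemini 3 Pro', 1_000_000):.2f}")
```

On these figures the Gemini 3 Pro ratio works out to a little under 17x, and GPT-5 to a little under 13x. Only input-token prices are compared, because those are the only prices quoted here.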

The Law of Diminishing Returns

We are seeing a classic "S-Curve" in AI performance. The difference between a "dumb" model and a "smart" model used to be huge.

Now, the gap between the #1 model and the #10 model on the leaderboard is often less than 4 percentage points on benchmarks like MMLU or HumanEval.

The "Good Enough" Threshold: For tasks like summarizing emails, writing basic Python scripts, or classifying customer support tickets, DeepSeek V3 or Llama 4 (Scout) performs at near-parity with the giants.

Paying the Gemini 3 Pro premium for these tasks is like commuting to work in a Formula 1 car. It’s impressive, but it’s a waste of resources.

DeepSeek V3: The "Budget King" of 2026

The market disruption of 2025/2026 wasn't a new capability; it was a price war.

DeepSeek V3 proved that you could achieve GPT-4 class performance at a fraction of the training and inference cost.

By optimizing their Mixture-of-Experts (MoE) architecture, they forced giants like OpenAI and Google to reconsider their pricing strategies.

If you are building a high-volume application (e.g., a real-time coding agent), using a model like DeepSeek isn't just "cheaper"; it's the only way to make your unit economics work.

When Is the Premium Worth It?

So, is Gemini 3 Pro worthless? Absolutely not. You pay the "10x tax" for two specific things.

Massive Context: Gemini 3 Pro’s 1M+ token context window is still unmatched for reliability. If you need to feed an entire codebase or a 500-page legal discovery document into a prompt, DeepSeek (often capped at 128k) simply cannot do the job.

Reasoning Reliability: In our tests, while cheaper models can code, they often get stuck in "logic loops" on complex architectural problems. Gemini 3 Pro is less prone to these errors.

The Hybrid Strategy: Smart teams use a router. Route 90% of simple queries to DeepSeek/Llama. Route the hardest 10% (or those requiring massive context) to Gemini 3 Pro.

This allows you to avoid paying for inflated benchmark scores while still having access to SOTA intelligence when it counts.
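The routing idea can be sketched in a few lines. This is an illustration under stated assumptions, not a production router: the `Request` type and its `is_hard` flag are hypothetical (in practice the flag might come from a classifier or from a failed first attempt on the cheap model), and the 128k limit is the cap mentioned above for DeepSeek.

```python
from dataclasses import dataclass

# Prices from this article, in USD per 1M input tokens.
CHEAP_PRICE = 0.27    # DeepSeek V3
PREMIUM_PRICE = 4.50  # Gemini 3 Pro
CHEAP_CONTEXT_LIMIT = 128_000  # the "often capped at 128k" figure from this article


@dataclass
class Request:
    prompt_tokens: int
    is_hard: bool  # hypothetical signal for complex, multi-step reasoning


def route(req: Request) -> str:
    """Send long-context or hard requests to the premium tier, everything else to the cheap tier."""
    if req.prompt_tokens > CHEAP_CONTEXT_LIMIT or req.is_hard:
        return "premium"
    return "cheap"


def blended_price(premium_share: float) -> float:
    """Average USD per 1M input tokens when `premium_share` of token volume goes to the premium tier."""
    return premium_share * PREMIUM_PRICE + (1 - premium_share) * CHEAP_PRICE


if __name__ == "__main__":
    print(route(Request(prompt_tokens=2_000, is_hard=False)))
    print(route(Request(prompt_tokens=600_000, is_hard=False)))
    print(f"{blended_price(0.10):.3f} USD per 1M input tokens at a 10% premium share")
```

With 10% of token volume on the premium tier, the blended input price comes to about $0.69 per million tokens. Note that this assumes the 90/10 split holds by token volume; if the premium requests are the long-context ones, their share of tokens (and of cost) will be higher than their share of queries.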

Conclusion: Don't Buy the Hype, Buy the ROI

The cost per token vs performance analysis for 2026 is clear: The top of the leaderboard is overpriced for general use.

Unless you specifically need "Humanity's Last Exam" level reasoning or million-token context, stick to the efficiency models. Your CFO will thank you.

Frequently Asked Questions (FAQ)

Only for specific use cases. If you require massive context windows (1M+ tokens) or highly complex reasoning for critical tasks, yes. For general text generation or basic coding, it is not cost-effective compared to DeepSeek V3.

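Calculate your "Cost Per Task" rather than Cost Per Token. If a cheap model fails 20% of the time and each failure means re-prompting, you need about 1.25 attempts per correct answer on average, so its effective cost rises by roughly 25%; at a 50% failure rate it doubles. Compare the total cost to get a correct answer between models.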
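A rough way to put numbers on "Cost Per Task" is shown below. It assumes every retry is independent, costs the same as the first attempt, and is repeated until the answer is correct, which gives an expected 1 / (1 - failure rate) attempts. The function names are illustrative, and the cost of detecting a failure in the first place is not modeled.

```python
def expected_attempts(failure_rate: float) -> float:
    """Expected number of attempts until the first success (geometric distribution)."""
    if not 0 <= failure_rate < 1:
        raise ValueError("failure_rate must be in [0, 1)")
    return 1 / (1 - failure_rate)


def cost_per_correct_answer(cost_per_attempt: float, failure_rate: float) -> float:
    """Average spend needed to obtain one correct answer."""
    return cost_per_attempt * expected_attempts(failure_rate)


if __name__ == "__main__":
    for rate in (0.0, 0.2, 0.5):
        print(f"failure rate {rate:.0%}: {expected_attempts(rate):.2f} attempts per correct answer")
```

Under these assumptions, a cheap model only loses its price advantage when its failure rate is high enough that the retry multiplier approaches the price ratio between the two models.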

DeepSeek V3 and Llama 4 (Scout/Maverick) are currently the value leaders, offering GPT-4 class coding capabilities at roughly $0.27-$0.30 per million tokens.

Llama 4 (open weights) allows you to self-host or use cheap API providers, making it significantly cheaper at scale. GPT-5 offers higher "out of the box" reasoning but at a ~10x price premium.

Switch when the "maintenance cost" of the open model (prompt engineering, error handling, self-hosting infra) exceeds the API cost of the paid model. Often, paid APIs are cheaper for low-volume startups due to zero overhead.
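The switch point in the last answer can be framed as a break-even volume. The sketch below treats the open model's maintenance burden as a fixed monthly overhead and compares only per-token prices beyond that. The $2,000 overhead is a made-up placeholder, not a measured figure; the per-token prices are the ones quoted in this article.

```python
def break_even_million_tokens(
    monthly_overhead_usd: float,
    paid_price_per_m: float,
    open_price_per_m: float,
) -> float:
    """Monthly volume (in millions of tokens) above which the open model is cheaper overall."""
    saving_per_m = paid_price_per_m - open_price_per_m
    if saving_per_m <= 0:
        return float("inf")  # the open model never pays back its overhead
    return monthly_overhead_usd / saving_per_m


if __name__ == "__main__":
    # Hypothetical overhead; GPT-5 and DeepSeek V3 input prices from this article.
    volume = break_even_million_tokens(2_000, paid_price_per_m=3.44, open_price_per_m=0.27)
    print(f"Break-even at about {volume:.0f}M input tokens per month")
```

With that placeholder overhead, the break-even lands at roughly 631 million input tokens per month. Below that volume the paid API is the cheaper option, which matches the point about low-volume startups.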

Sources & References

External Market Data:

- LLM-Stats.com: DeepSeek-V3 vs GPT-4o Benchmarks & Pricing (Jan 2026).

- Artificial Analysis: AI Model Leaderboard & Pricing Analysis 2026.

- Epoch AI: LLM Inference Price Trends & Diminishing Returns.

Internal Resources:

- Interpreting LLM Benchmark Scores: The Developer’s Guide

- Why AI Benchmarks Are Fake: Inside the "Data Contamination" Scandal