Interpreting LLM Benchmark Scores: Why "Humanity’s Last Exam" is Lying to You

Quick Summary: Key Takeaways

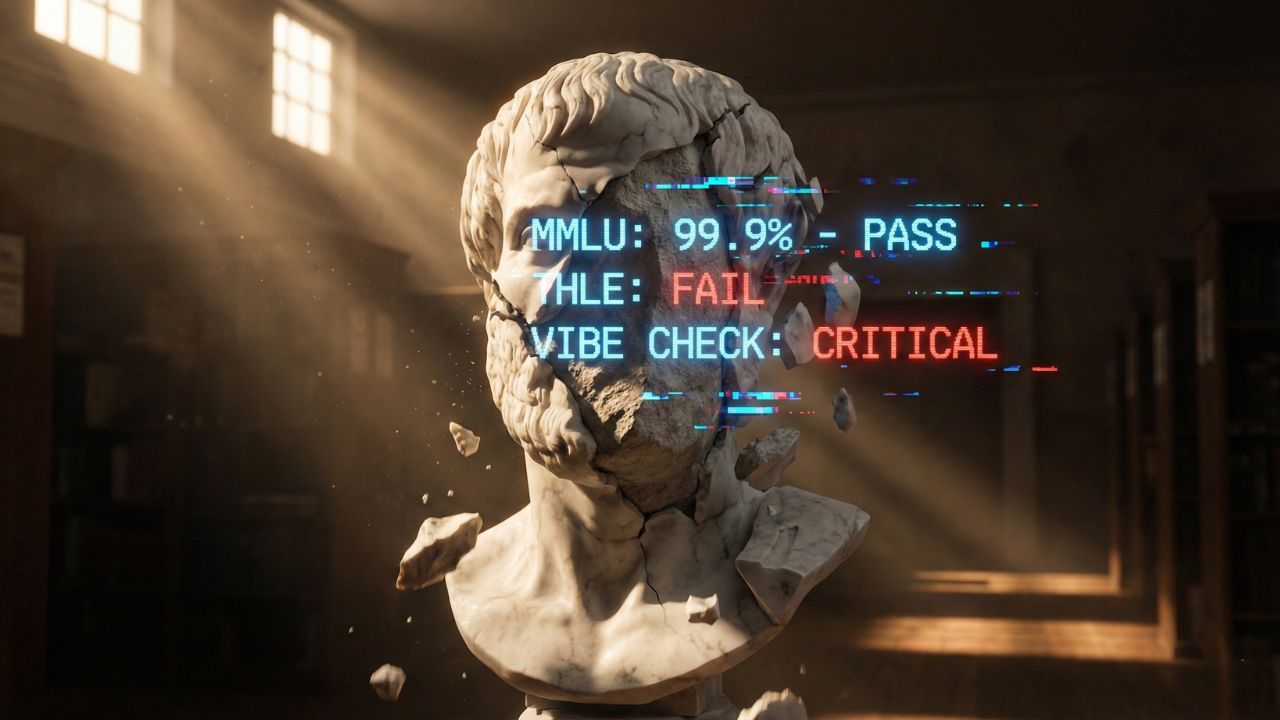

- Scores Are Deceptive: High benchmark scores often indicate memorization, not reasoning capabilities.

- The "HLE" Standard: "Humanity's Last Exam" is the new, brutally difficult test designed to break current AI models.

- Contamination is Rampant: Many models have already "seen" the test questions during training, making their scores meaningless.

- Vibe Checks: Developers are moving away from static charts and trusting "Vibe Checks" for real-world utility.

- Cost vs. IQ: A higher score on a chart doesn't always justify paying 10x more per token.

Interpreting LLM benchmark scores used to be simple: the higher the number, the smarter the model. But if you have ever tried to run a "state-of-the-art" model that scored 90% on MMLU only to watch it fail a basic Python loop, you know that the leaderboards are broken.

We need to fundamentally change how we evaluate Artificial Intelligence. Knowing how to interpret LLM benchmark scores is critical for anyone building or deploying AI today.

The Great MMLU Illusion

For years, the Massive Multitask Language Understanding (MMLU) benchmark was the gold standard. It covers everything from elementary math to professional law.

But in 2026, MMLU is effectively "solved." When every major model scores between 85% and 90%, the metric loses its value.

It’s like measuring the height of NBA players: they are all tall, but that doesn't tell you who can shoot a three-pointer. Worse, many of these models aren't actually "smarter." They are just good at taking the test.

If you suspect something fishy is going on, you aren't alone. We did a deep dive into the scandal of data contamination to explain why AI benchmarks are fake.
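One rough way to probe for contamination yourself is to measure how many word n-grams from a benchmark question also appear verbatim in text you suspect was used for training. The sketch below is only a heuristic under stated assumptions: the function names, the sample strings, and the choice of 5-word n-grams are illustrative, and real audits use far larger corpora and more careful tokenization.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercase, whitespace-tokenized word n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(question: str, corpus_text: str, n: int = 5) -> float:
    """Fraction of the question's n-grams that also occur in corpus_text.

    A value near 1.0 suggests the question appears almost verbatim in the
    corpus; a value near 0.0 suggests no verbatim overlap. This does not
    detect paraphrased leaks.
    """
    question_grams = ngrams(question, n)
    if not question_grams:
        return 0.0  # question shorter than n words: nothing to compare
    corpus_grams = ngrams(corpus_text, n)
    return len(question_grams & corpus_grams) / len(question_grams)


# Hypothetical sample data, made up for illustration only.
question = "Which planet in the solar system has the most confirmed moons"
leaked = "forum dump: which planet in the solar system has the most confirmed moons answer saturn"
clean = "a blog post about cooking pasta with fresh tomatoes and basil in under twenty minutes"

print(overlap_ratio(question, leaked))
print(overlap_ratio(question, clean))
```

A high ratio is a warning sign rather than proof, and a low ratio does not rule out paraphrased or translated copies of the test set.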

Enter "Humanity’s Last Exam" (HLE)

Because models broke the old tests, researchers built a new one. "Humanity’s Last Exam" (HLE) was designed to be impossible for current LLMs to memorize.

It doesn't ask trivia-style questions about history. It asks expert-level questions that demand complex, multi-step reasoning and genuine understanding. If MMLU is a high school pop quiz, HLE is a doctoral thesis defense.

When a model like Gemini 3 Pro scores poorly on HLE, it’s not failing. It’s finally being honest.

Coding: The Benchmark vs. Reality Gap

The biggest disconnect happens in software development. A model might score 95% on "HumanEval," but can it refactor a 500-line legacy codebase without hallucinating?

Usually, the answer is no. Benchmarks test snippet generation. They rarely test architectural logic or long-context debugging.
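It helps to see what a HumanEval-style number actually measures. Scores on that benchmark are typically reported as pass@k: the probability that at least one of k sampled completions for a short, isolated function passes its unit tests. A minimal sketch of the unbiased estimator described in the HumanEval paper follows; the function name and the example numbers are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: total completions sampled for the problem
    c: how many of those completions passed the unit tests
    k: the sampling budget being scored (1 <= k <= n)
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    if n - c < k:
        # Fewer than k failures exist, so any k samples include a pass.
        return 1.0
    # 1 minus the probability that all k drawn samples are failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Illustrative numbers: 10 samples, 3 of them correct.
print(pass_at_k(n=10, c=3, k=1))
print(pass_at_k(n=10, c=3, k=5))
```

Nothing in this calculation involves reading a large codebase, keeping track of long context, or making architectural decisions, which is why a high pass@k can coexist with poor results on real refactoring work.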

We decided to ignore the charts and run a real-world Gemini 3 Pro vs GPT-5 coding test to see which one actually ships usable code. The results proved that "exam smarts" don't always translate to "street smarts."

Interpreting LLM Benchmark Scores Using the "Vibe Check"

If the numbers are unreliable, what should a developer trust? Enter the "Vibe Check." This isn't scientific, but it’s practical. It involves testing a model on your specific edge cases: the weird, messy problems that don't appear in clean datasets.

Top engineers are increasingly prioritizing how a model "feels" to use over its raw score. Does it follow instructions? Is it lazy? Does it refuse to code?
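A vibe check gets more useful once it is repeatable. The sketch below shows one possible shape for a tiny harness: you write down your own edge cases with a pass/fail check, then run any model against them. Everything here is an assumption for illustration: `Case`, `run_vibe_check`, and `fake_model` are hypothetical names, and `fake_model` is a stand-in you would replace with a call to whichever model API you actually use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    name: str                     # short label for the edge case
    prompt: str                   # the prompt sent to the model
    check: Callable[[str], bool]  # returns True if the output is acceptable


def run_vibe_check(model: Callable[[str], str], cases: list[Case]) -> dict[str, bool]:
    """Run every case through the model and record pass/fail per case."""
    results: dict[str, bool] = {}
    for case in cases:
        try:
            results[case.name] = bool(case.check(model(case.prompt)))
        except Exception:
            # A crash in the model call or the check counts as a failure.
            results[case.name] = False
    return results


def pass_rate(results: dict[str, bool]) -> float:
    """Share of cases that passed (0.0 when there are no cases)."""
    return sum(results.values()) / len(results) if results else 0.0


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    if "JSON" in prompt:
        return '{"status": "ok"}'
    return "I'm sorry, I can't help with that."


cases = [
    # Does it follow instructions? Expect bare JSON with no preamble.
    Case("json_only", "Reply with JSON only: status ok",
         lambda out: out.strip().startswith("{")),
    # Does it refuse to code? Treat an apology-style refusal as a failure.
    Case("no_refusal", "Write a Python loop that sums a list",
         lambda out: "can't" not in out.lower()),
]

results = run_vibe_check(fake_model, cases)
print(results, pass_rate(results))
```

The checks are deliberately crude string tests. The value comes from the cases being yours, so the same list can be rerun whenever a new model or version appears.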

This cultural shift is changing how we evaluate AI. Learn more about the battle of vibe coding vs benchmarks and why intuition is becoming a valid metric.

The ROI of Intelligence

Finally, you have to ask: Is the extra intelligence worth the price? A model might be 2% better on a benchmark but cost 500% more to run.

For enterprise applications, that math rarely adds up. Unless you are doing cutting-edge research, "good enough" is often the smarter financial choice.

Before you commit to an expensive API, you need to analyze the cost per token vs performance to ensure you aren't burning cash for vanity metrics.
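The trade-off can be made concrete with a little arithmetic. The sketch below compares two models by cost per correct answer and by the marginal cost of each additional correct answer. All figures are made-up assumptions chosen to mirror the "2% better, 500% more expensive" scenario (500% more means six times the price); they are not measurements of any real model, and the function names are illustrative.

```python
def cost_per_correct(cost_per_task: float, accuracy: float) -> float:
    """Average spend needed to obtain one correct answer."""
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be in (0, 1]")
    return cost_per_task / accuracy


def marginal_cost_per_extra_correct(
    cheap_cost: float, cheap_acc: float, pricey_cost: float, pricey_acc: float
) -> float:
    """Extra spend per additional correct answer gained by upgrading."""
    gain = pricey_acc - cheap_acc
    if gain <= 0:
        raise ValueError("the pricier model must be more accurate")
    return (pricey_cost - cheap_cost) / gain


# Hypothetical figures: dollars per task and accuracy on your own task set.
cheap_cost, cheap_acc = 0.001, 0.88
pricey_cost, pricey_acc = 0.006, 0.90  # 500% more expensive, 2 points better

print(cost_per_correct(cheap_cost, cheap_acc))
print(cost_per_correct(pricey_cost, pricey_acc))
print(marginal_cost_per_extra_correct(cheap_cost, cheap_acc, pricey_cost, pricey_acc))
```

Under these assumed numbers, each extra correct answer from the pricier model costs about $0.25, versus roughly a tenth of a cent per correct answer from the cheaper one. Whether that is worth paying depends on what a wrong answer costs your application.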

Final Verdict

The era of blind trust in leaderboards is over. Interpreting LLM benchmark scores in 2026 requires skepticism. Look for independent audits, test for your specific use case, and never assume a high number equals high competence.

Frequently Asked Questions (FAQ)

A high MMLU score generally indicates broad knowledge and reasoning ability, but it does not directly correlate to coding proficiency. MMLU tests general topics (like law or history), whereas coding requires specific syntax handling and logic structure, which are better measured by tests like HumanEval or SWE-bench.

HLE is a next-generation benchmark designed to be "contamination-proof." It focuses on novel, complex reasoning tasks that cannot be solved by memorizing training data. It is difficult because it forces models to generalize and think abstractly, rather than just pattern-match.

Models often score well on benchmarks yet fail at real-life tasks. This discrepancy is often due to "data contamination" (the model saw the test questions during training) or "overfitting" (the model was optimized specifically to pass the test). Real-life tasks are messy and unstructured, unlike the clean, isolated environment of a benchmark.

Don't rely on a single aggregate score. Look at specific sub-scores relevant to your needs (e.g., "Math" or "Code"). Better yet, ignore the scores and run a practical test on a task you actually perform daily to see which model handles your specific context better.

Static benchmarks are generally no longer reliable on their own. Most are considered saturated or compromised by gaming and contamination. Trust independent, third-party evaluations (like Chatbot Arena) or newer, harder benchmarks like HLE over the self-reported scores from AI companies.