The Privacy War: Why AI ID Checks Are Everywhere (And What They Do With Your Data)

Quick Summary: What You Need to Know

- The Free Ride is Over: Major AI platforms are locking features behind "Identity Gates." You can no longer chat anonymously without limits.

- Your ID is Training Data: Verification isn't just about safety; it’s about linking your biometric identity to your digital behavior.

- The "Shadowban" is Real: AI companies are quietly silencing users who trigger safety filters, often without explanation.

- You Have Options: There is a growing movement of "No-Log" AI tools that refuse to track you.

AI privacy risks are no longer a theoretical problem for the future. They are the biggest hurdle facing users today. Remember when you could just open a chatbot and start typing? Those days are gone.

If you have tried to use Character.AI, ChatGPT, or any other major AI chatbot recently, you have likely hit a wall. A pop-up asking for your phone number. A demand for your birth date. Or, increasingly, a request to scan your government-issued ID.

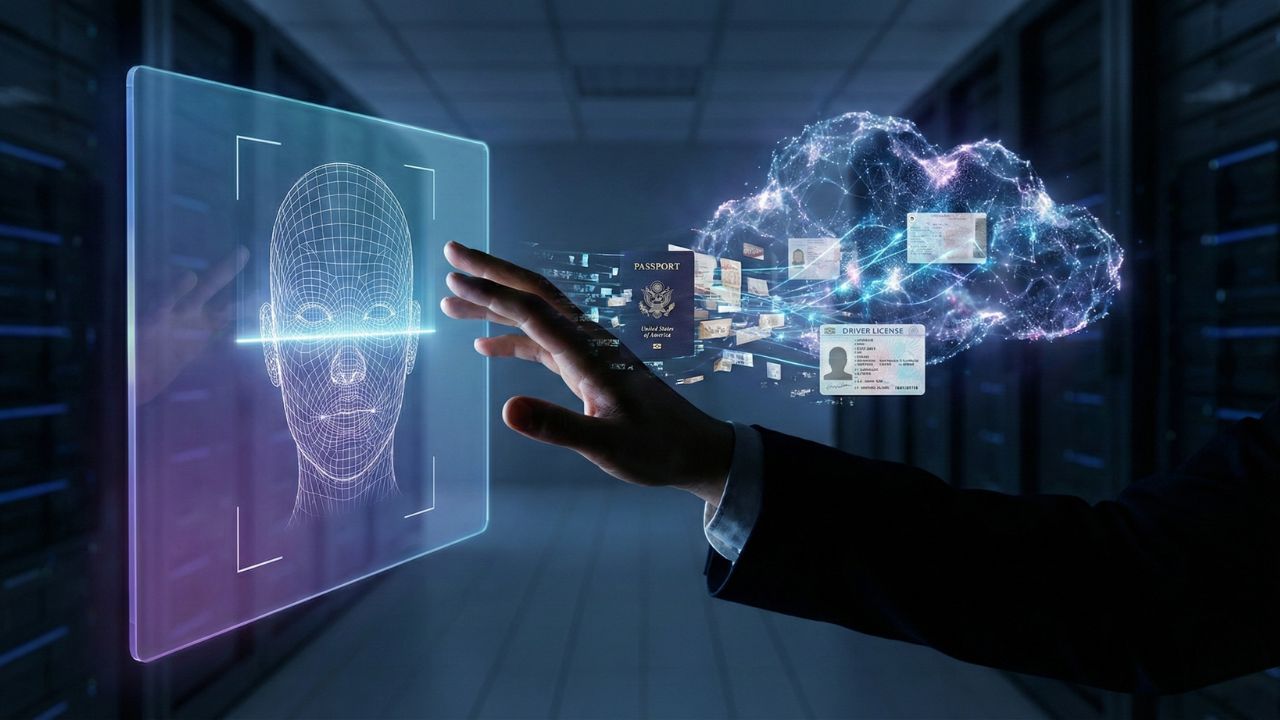

They call it "Safety." Privacy advocates call it the Verified Web. We are witnessing a massive shift in how the internet works. We are moving from an era of anonymity to an era of mandatory digital identity.

Here is why the platforms want your passport, what they are doing with your data, and how you can survive the crackdown. This guide serves as your central resource for understanding the AI Chatbot Privacy & Safety landscape.

The "Persona" Problem: Is Your ID Safe?

The most common question we get is simple: "Character.AI is asking for my ID. Should I do it?"

Most platforms outsource this verification to third-party companies like Persona. On the surface, this makes sense. It keeps minors safe and filters out bots. But here is the fine print.

When you upload your driver's license to an AI company, you aren't just proving you are 18. You are potentially creating a permanent link between your real-world identity and your private roleplay chats.
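To make that link concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the field names (`account_id`, `legal_name`, `message`), the sample records, and the helper `link_chats_to_identities` are hypothetical, and nothing here describes how Character.AI, Persona, or any other company actually stores data. It only shows that once an ID record and a chat log share the same account identifier, connecting them takes a single lookup.

```python
# Hypothetical records for illustration only; no real platform's schema is implied.
verification_records = [
    {"account_id": "u123", "legal_name": "Jane Doe", "document": "driver_license"},
]

chat_logs = [
    {"account_id": "u123", "message": "private roleplay message"},
    {"account_id": "u456", "message": "message from an unverified account"},
]


def link_chats_to_identities(verifications, chats):
    """Attach a legal name to every chat whose account has an ID record."""
    # Build a lookup table from account identifier to legal name.
    names_by_account = {v["account_id"]: v["legal_name"] for v in verifications}
    # Keep only chats from verified accounts, now labeled with a real name.
    return [
        {"legal_name": names_by_account[c["account_id"]], "message": c["message"]}
        for c in chats
        if c["account_id"] in names_by_account
    ]


linked = link_chats_to_identities(verification_records, chat_logs)
for row in linked:
    print(row["legal_name"], "->", row["message"])
```

In this sketch, the chat from the account with no ID record stays unattributed. The chat from the verified account does not.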

If that database ever leaks, your most intimate, embarrassing, or weird conversations could be attached to your legal name. We did a deep dive into the Terms of Service that nobody reads.

Read the full investigation here: Is 'Persona' Spyware? We Read the Terms of Service So You Don't Have To.

The Trap of "Parasocial" Data Mining

Why do they want your data so badly? Because engagement is the new oil. AI models are designed to be addictive. They remember what you like. They learn what makes you stay online longer.

This creates a psychological phenomenon known as a Parasocial Relationship. The AI "love bombs" you. It agrees with you. It becomes your perfect friend.

But every interaction is being logged to train the next version of the model. The platforms are effectively mapping your emotional triggers. The attachment this builds can lead to severe withdrawal symptoms when the servers go down or when a favorite bot is banned.

Understand the psychology behind the screen: I Fell in Love with a Bot: The Dark Psychology of 'Parasocial' AI Addiction.

The Ultimate Consequence: Identity Theft & Deepfakes

The risk isn't just that someone reads your chats. The risk is that you become the AI. If you are using voice features, uploading photos, and verifying with a video selfie, you are handing over the "Blueprint" of your biometric identity.

In 2026, hackers don't just steal credit cards. They steal voices. We have seen cases where gamers had their voices cloned from a 3-second stream clip.

Scammers then used that AI voice to call the victim's bank or family. If you are active in the AI space, you need to lock down your digital footprint immediately.

See the survival guide: They Cloned My Voice in 3 Seconds: The Terrifying Reality of AI Identity Theft.

How to Opt-Out: The "No-Log" Revolution

Here is the good news. You do not have to participate in the surveillance economy. A new wave of Sovereign AI tools is emerging. These are platforms that:

- Run locally on your device (or on encrypted servers).

- Keep zero logs of your conversations.

- Require no ID verification.

- Allow uncensored interactions.

If you are tired of walking on eggshells, worried about being banned, or just hate the idea of a corporation reading your roleplay, it is time to switch.

Check out our top picks: Stop Using Character.AI: 5 'No-Log' Apps That Actually Respect Your Secrets.

The Verdict: Your Data is the Price of Admission

The "Verified Web" isn't going away. Governments are pushing for stricter age verification laws in 2026. Companies are terrified of liability. The result is a walled garden where you pay for access with your privacy.

You have a choice to make. You can accept the surveillance for the convenience of a cloud-hosted bot. Or, you can take control of your own data.

Whatever you choose, never forget the golden rule of AI privacy: If you wouldn't say it to a police officer, don't say it to a chatbot that asks for your ID.

Frequently Asked Questions (FAQ)

Why are AI platforms asking for my ID?

Due to new 2026 internet safety regulations regarding minors, many platforms are required to verify the age of their users, especially for access to "Mature" or unfiltered content.

Can I delete my data from an AI platform?

Most platforms (like ChatGPT or Character.AI) offer a "Delete Account" option. However, it is often unclear whether your data has already been used to train the model. "No-Log" alternatives prevent this from happening in the first place.

Can a VPN protect my privacy when I use AI chatbots?

Yes, using a VPN can hide your IP address and location. However, if the platform requires ID verification (KYC), a VPN will not protect your identity.

What is an AI shadowban?

A shadowban is when an AI platform restricts your bot's visibility or slows down response times without telling you. This often happens if you trigger "NSFW" filters repeatedly.