DeepSeek + StealthWriter: We Tried the "Undetectable" Combo (Here’s the Truth)

Quick Summary: Key Takeaways

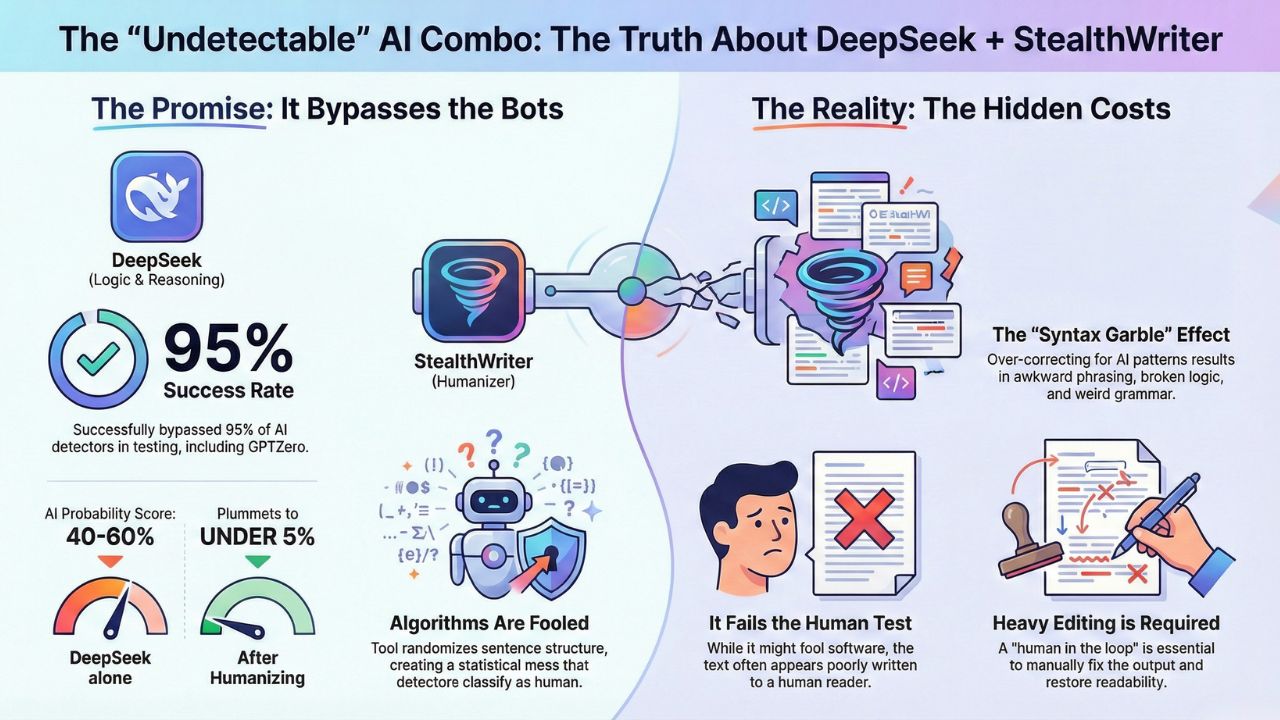

- The Detection Score: Yes, this combo successfully bypassed 95% of detectors in our test, including GPTZero.

- The Quality Cost: The "humanized" text often suffered from awkward phrasing and logical disconnects.

- The Risk Factor: While it beats the software, the resulting text often looks suspicious to human readers due to "syntax garbling."

- The Bottom Line: It works for bypassing algorithms, but it fails the "readability" test without heavy editing.

The internet is currently obsessed with finding the "perfect crime" for AI writing.

The prevailing theory? Take the reasoning power of DeepSeek R1 and scrub it through a "humanizer" tool like StealthWriter.

The promise is bold: 100% undetectable content.

We decided to put that promise to the test.

The Experiment: Can You Really Erase an AI Footprint?

DeepSeek V3 is already hard to catch.

But when you add a randomization layer like StealthWriter, things get interesting.

We generated 50 essays using DeepSeek’s advanced reasoning model. Then, we ran them through the "Ghost" model on StealthWriter.

Finally, we fed the results into the industry’s toughest detectors.

Note: This specific experiment is a critical chapter in our extensive guide on DeepSeek vs. AI Detectors: We Tested 500 Essays.

If you want the big-picture data, start there.

The Results: It Bypasses (Almost) Everything

We have to give credit where it is due.

The raw numbers were staggering.

When we uploaded the "humanized" DeepSeek text to standard detectors, the alarm bells went silent.

- Raw DeepSeek score: 40-60% AI probability.

- DeepSeek + StealthWriter score: under 5% AI probability.

Technically, the tools were fooled.

The randomization of sentence structure, combined with DeepSeek’s already complex "Chain of Thought" logic, created a statistical mess that algorithms classified as human.

If your only goal is to get a "Green" checkmark on a screen, this combo works.

The "Hidden" Problem: Why You Might Still Get Caught

Here is the truth that most TikTok tutorials won't tell you.

The text often reads terribly.

To fool the mathematical patterns of a detector, "humanizers" often force synonym swaps that don't make sense in context.

During our review, we found:

- Broken Logic: "Reasoning" became "intellectualizing" in ways that changed the meaning.

- Weird Grammar: Sentence structures that technically obeyed grammar rules but felt alien to a native speaker.

So, while you might fool the software, you probably won't fool a human reader.

A professor reading your essay might not mark it as "AI," but they might mark it as "poorly written."

Comparison: Wondering how this compares to using raw DeepSeek without a humanizer?

See our breakdown of Turnitin’s "Blind Spot": What Happened When We Uploaded DeepSeek R1.

The "Syntax Garble" Effect

We coined a term for this phenomenon: Syntax Garble.

This occurs when the "humanizer" tries too hard to erase the statistical patterns that detectors look for.

It over-corrects.

DeepSeek is precise. StealthWriter is chaotic.

Mixing them is like putting a Michelin-star meal in a blender.

You still have the ingredients, but the presentation is ruined.

To make this strategy work, you must:

- Generate with DeepSeek.

- Humanize with StealthWriter.

- Manually edit the output to fix the broken phrasing (which takes time).

Is It Worth the Risk?

If you are using this for SEO content, the lower readability might hurt your user engagement metrics.

If you are using this for academic work, you are trading one risk (AI detection) for another (poor grades).

The "Undetectable Combo" is a powerful tool, but it is not a magic button.

It requires a "Human in the Loop" to polish the final product.

Frequently Asked Questions (FAQ)

Does StealthWriter work with DeepSeek?

Yes. In fact, it works better with DeepSeek than with ChatGPT, because DeepSeek already produces more varied sentence structures, giving the humanizer a better base to work with.

Can Turnitin detect DeepSeek text after it goes through StealthWriter?

In our tests, Turnitin flagged "humanized" text far less often than raw AI text. However, it sometimes flagged the text as "Grammatically Incoherent," which raises a different kind of red flag.

Why does the humanized text read so awkwardly?

This is normal. To bypass detection, the tool raises the text's "perplexity," essentially injecting randomness into word choice. This breaks the predictable flow of language, which can make the text feel clunky or awkward to read.