Video SEO 2026: Ranking in Sora, Veo, and YouTube AI

Text is no longer enough. The 2026 guide to "Video GEO." Learn to structure metadata and scripts to rank in Google Veo, OpenAI Sora, and YouTube's AI search algorithms.

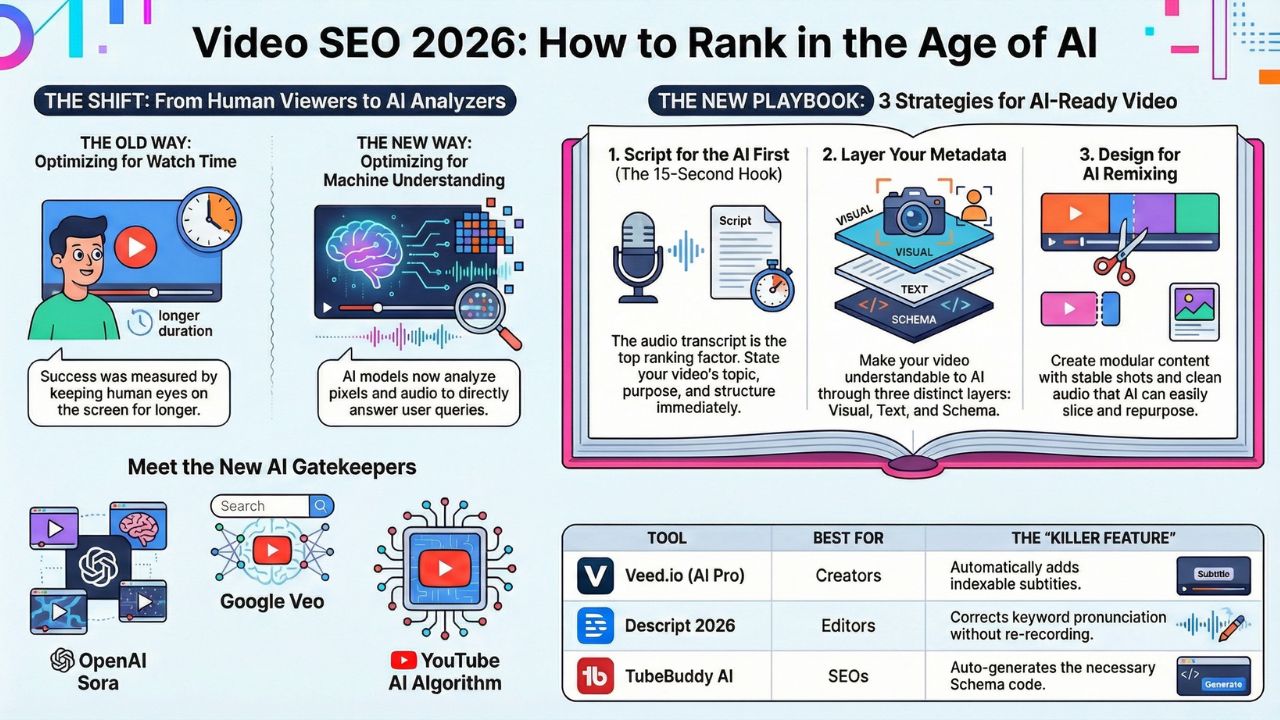

The End of "Watch Time" as the Only Metric

For the last 15 years, YouTube had one god: Watch Time. If you kept eyes on the screen, the algorithm rewarded you. In 2026, that god is dead.

A new breed of search engine has arrived. When a user asks OpenAI Sora or Google Veo to "Show me a video of how to fix a leaking tap," the AI doesn't just look for a video title. It looks at the pixels. It listens to the audio. It understands the scene.

This is Generative Video Optimization (Video GEO). If your video content isn't optimized for these Large Vision Models (LVMs), you are invisible. You might have the best content, but if the AI can't "see" it, you don't exist.

This guide is your blueprint to surviving the shift from "Recommended Videos" to "AI-Generated Answers."

The New Landscape: Sora, Veo, and The "Smart" YouTube

To win, you must understand the three gatekeepers of video traffic in 2026:

- OpenAI Sora: Not just a video generator, but a visual search engine. It constructs answers by analyzing millions of video frames.

- Google Veo: The visual brain of Google Search. It powers the "Video AI" snippets that appear above standard search results.

- YouTube AI Algorithm 2026: It no longer relies solely on tags. It uses "Multi-Modal Understanding" to transcribe your audio, identify objects in your video (e.g., recognizing a specific brand of coffee maker), and index them instantly.

Ranking here requires a fundamentally different strategy. You need to speak to the machine.

Strategy 1: The "Script-to-Rank" Formula

Your audio transcript is arguably the most critical ranking factor in 2026. AI models can transcribe audio faster than they can process pixels, so they lean on the transcript first. They appear to weight the first 15-30 seconds of audio most heavily when judging "Entity Relevance." If you bury the lede, the AI may classify your video as "Low Confidence" and move on.

We developed the "Script-to-Rank" formula to give Sora, Veo, and YouTube's AI the clearest possible signal in those opening seconds.

Template: The 15-Second AI Hook

Use this structure for the first 3 sentences of every video script.

- 0:00 - 0:05 (The Entity Declaration): "In this video, we are reviewing the [Specific Product Name/Entity], specifically testing its [Specific Feature A] and [Specific Feature B]."
  Why: Signals immediate entity relevance to the Knowledge Graph.

- 0:05 - 0:10 (The Intent Match): "If you are looking to [Solve Specific Problem] or [Achieve Specific Outcome], this workflow is designed for [Target Audience]."
  Why: Aligns with the user's prompt intent (e.g., "How to fix...").

- 0:10 - 0:15 (The Structural Map): "We will cover three steps: [Step 1 Keyword], [Step 2 Keyword], and the final verdict on [Primary Keyword]."
  Why: Tells the AI exactly how to segment the video into chapters.
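You can check whether a filled-in hook actually fits the 15-second budget by estimating its spoken length from its word count. This is a rough sketch, not a platform tool. It assumes an average speaking rate of 150 words per minute, which you should adjust through `WORDS_PER_SECOND` to match your own delivery. `build_hook`, `hook_fits`, and the sample fields are hypothetical.

```python
# Assumed average speaking rate (150 words per minute); tune to your delivery.
WORDS_PER_SECOND = 150 / 60

# The three hook sentences from the template, as format strings.
HOOK_TEMPLATE = [
    ("Entity Declaration",
     "In this video, we are reviewing the {entity}, specifically testing its "
     "{feature_a} and {feature_b}."),
    ("Intent Match",
     "If you are looking to {problem} or {outcome}, this workflow is designed "
     "for {audience}."),
    ("Structural Map",
     "We will cover three steps: {step1}, {step2}, and the final verdict on "
     "{primary}."),
]


def build_hook(fields, words_per_second=WORDS_PER_SECOND):
    """Fill each sentence and estimate how many seconds it takes to say."""
    rows = []
    for label, template in HOOK_TEMPLATE:
        sentence = template.format(**fields)
        seconds = len(sentence.split()) / words_per_second
        rows.append((label, sentence, seconds))
    return rows


def hook_fits(rows, budget_seconds=15.0):
    """True if the estimated total speaking time fits the budget."""
    return sum(seconds for _, _, seconds in rows) <= budget_seconds


# Hypothetical fields for an espresso-machine review.
rows = build_hook({
    "entity": "Acme X200 espresso machine",
    "feature_a": "steam wand",
    "feature_b": "grinder",
    "problem": "pull a better shot",
    "outcome": "cut cafe costs",
    "audience": "home baristas",
    "step1": "grinding",
    "step2": "tamping",
    "primary": "the X200",
})
for label, sentence, seconds in rows:
    print(f"{label}: ~{seconds:.1f}s")
print("Fits 15 s:", hook_fits(rows))
```

These sample fields produce estimates of about 7.2, 8.0, and 5.6 seconds, roughly 21 seconds in total. To land inside the 15-second window at this rate, you would need to trim the wording or speak faster.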

Pro Tip: Enunciate these keywords clearly. To make the opening easy for LLMs to parse, avoid slang and vague metaphors in the first few seconds.
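The opening is judged largely from the transcript. A practical check, then, is whether your entity keywords actually appear in the auto-generated captions within the first 15 seconds. The sketch below is a minimal illustration under stated assumptions, not how any platform scores videos:

- The `Segment` type, the `keywords_in_opening` helper, and the sample transcript are hypothetical.
- Matching is plain case-insensitive substring search.
- The 15-second window is simply the figure this guide uses.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One timestamped transcript segment (times in seconds from video start)."""
    start: float
    end: float
    text: str


def keywords_in_opening(segments, keywords, window=15.0):
    """Map each keyword to the start time of the first opening segment that
    mentions it, or None if it never appears before `window` seconds."""
    ordered = sorted(segments, key=lambda seg: seg.start)
    report = {}
    for keyword in keywords:
        needle = keyword.lower()
        report[keyword] = None
        for seg in ordered:
            if seg.start >= window:
                break  # segments are sorted, so nothing later can qualify
            if needle in seg.text.lower():
                report[keyword] = seg.start
                break
    return report


# Hypothetical transcript for an espresso-machine review.
transcript = [
    Segment(0.0, 5.0, "In this video, we are reviewing the Acme X200 espresso machine."),
    Segment(5.0, 10.0, "If you want cafe-quality crema at home, this workflow is for beginners."),
    Segment(10.0, 15.0, "We will cover grinding, tamping, and the final verdict on the X200."),
    Segment(15.0, 25.0, "Let's start with descaling."),
]

report = keywords_in_opening(transcript, ["Acme X200", "tamping", "descaling"])
missing = [kw for kw, t in report.items() if t is None]
print(report)   # {'Acme X200': 0.0, 'tamping': 10.0, 'descaling': None}
print(missing)  # ['descaling']
```

A keyword reported as missing (here, "descaling") is a cue to move it earlier in the script. It may also mean the captioner misheard it.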

Strategy 2: AI Video Metadata Strategy (The "Invisible" Text)

In the old days, you uploaded a video and added a title. Today, an AI video metadata strategy involves layers of data that help AI systems surface your video in search results. You must optimize three distinct layers:

The Visual Layer (Pixel Semantics)

Google Veo analyzes the visual feed. If your video is about coding in Python, make sure Python code is clearly visible on screen. If you are reviewing a car, keep the logo centered. Visual clarity is now a ranking signal.

The Text Layer (Context Windows)

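Don't stop at a short description. Paste your entire transcript into the description field or a pinned comment. This feeds the LLM's "Context Window," giving it more tokens to associate with your video. YouTube caps descriptions at 5,000 characters, so you may need to trim a long transcript there and upload the full text as a caption file.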
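Here is a sketch of assembling such a description. It contains a chapter list in YouTube's timestamp format, followed by as much of the transcript as fits. It rests on two assumptions about YouTube:

- Descriptions are limited to 5,000 characters.
- Chapters must start at 00:00, there must be at least three of them, and each must last at least 10 seconds.

The function names and sample data are hypothetical.

```python
YOUTUBE_DESCRIPTION_LIMIT = 5000  # maximum description length, in characters


def fmt_timestamp(seconds):
    """Format seconds as MM:SS (or H:MM:SS past one hour) for chapter lists."""
    hours, rem = divmod(int(seconds), 3600)
    minutes, secs = divmod(rem, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes:02d}:{secs:02d}"


def valid_chapters(chapters, video_length):
    """Check the chapter rules: start at 0, at least 3 chapters, each >= 10 s."""
    if len(chapters) < 3 or chapters[0][0] != 0:
        return False
    starts = [start for start, _ in chapters]
    ends = starts[1:] + [video_length]
    return all(end - start >= 10 for start, end in zip(starts, ends))


def build_description(summary, chapters, transcript, video_length,
                      limit=YOUTUBE_DESCRIPTION_LIMIT):
    """Summary + chapter list + as much transcript as fits, cut at a sentence end."""
    if not valid_chapters(chapters, video_length):
        raise ValueError("chapters do not meet YouTube's chapter rules")
    chapter_lines = "\n".join(f"{fmt_timestamp(s)} {title}" for s, title in chapters)
    head = f"{summary}\n\n{chapter_lines}\n\nTranscript:\n"
    room = max(limit - len(head), 0)
    body = transcript
    if len(body) > room:
        cut = body.rfind(". ", 0, room)  # last full sentence that fits
        body = body[:cut + 1] if cut != -1 else body[:room]
    return head + body


chapters = [(0, "Intro"), (30, "Installing Libraries"), (121, "Writing the Agent Logic")]
transcript = "First sentence. " * 400  # placeholder, 6,400 characters
description = build_description("How to build AI agents in Python.",
                                chapters, transcript, video_length=300)
print(len(description))
print(description.splitlines()[2])  # 00:00 Intro
```

The transcript is cut at the last ". " that fits, so the description never ends mid-sentence. If no sentence break fits, the code falls back to a hard cut at the limit.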

The Schema Layer (Structured Data)

You cannot rely on platforms to do this for you. You must add VideoObject schema markup to the webpage where the video is embedded.
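If you publish many videos, generate this markup from the same chapter list you use elsewhere so the offsets stay consistent. The following is a minimal sketch that uses only Python's standard `json` module. Several parts are assumptions, not any platform's API:

- `video_object_jsonld` and `as_script_tag` are hypothetical helpers.
- The example URLs are placeholders.
- The `?t=` deep-link pattern mirrors the VideoObject example in this guide.

```python
import json


def video_object_jsonld(name, description, thumbnail_url, upload_date,
                        page_url, transcript, chapters, video_length):
    """Build a schema.org VideoObject whose hasPart lists one Clip per chapter."""
    starts = [start for start, _ in chapters]
    ends = starts[1:] + [video_length]  # each clip runs until the next begins
    clips = [
        {
            "@type": "Clip",
            "name": title,
            "startOffset": start,
            "endOffset": end,
            "url": f"{page_url}?t={start}",  # assumes the page honors ?t=
        }
        for (start, title), end in zip(chapters, ends)
    ]
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,
        "transcript": transcript,
        "hasPart": clips,
    }


def as_script_tag(data):
    """Serialize to a JSON-LD <script> tag; escape "</" so text can't close the tag."""
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


data = video_object_jsonld(
    name="How to Build AI Agents with Python",
    description="A comprehensive guide to coding autonomous agents.",
    thumbnail_url="https://example.com/thumbnail.jpg",
    upload_date="2026-02-21T08:00:00+08:00",
    page_url="https://example.com/video",
    transcript="Full text of the video goes here.",
    chapters=[(0, "Intro"), (30, "Installing Libraries"),
              (121, "Writing the Agent Logic")],
    video_length=300,
)
print(as_script_tag(data))
```

Each clip's endOffset is set to the next chapter's start, so the key moments tile the video without gaps. Check the output with Google's Rich Results Test before deploying.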

Code Snippet: The 2026 VideoObject Schema

Your developer needs to add this JSON-LD to your page. Note the hasPart property: each Clip inside it defines a "Key Moment" (a named, timestamped segment) for the AI.

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "VideoObject",
  "name": "How to Build AI Agents with Python",
  "description": "A comprehensive guide to coding autonomous agents...",
  "thumbnailUrl": "https://example.com/thumbnail.jpg",
  "uploadDate": "2026-02-21T08:00:00+08:00",
  "transcript": "Full text of the video goes here for the AI to read...",
  "hasPart": [
    {
      "@type": "Clip",
      "name": "Installing Libraries",
      "startOffset": 30,
      "endOffset": 120,
      "url": "https://example.com/video?t=30"
    },
    {
      "@type": "Clip",
      "name": "Writing the Agent Logic",
      "startOffset": 121,
      "endOffset": 300,
      "url": "https://example.com/video?t=121"
    }
  ]
}
</script>
```

Strategy 3: Ranking in "Generative" Results (Veo & Sora)

Veo search ranking is unique. Google Veo may generate a new video clip by splicing together parts of your video with others. To be included in this "Super-Cut," your content must be "Modular."

- Avoid Long Intros: Get to the point immediately.

- Visual Stability: Use a tripod. AI struggles to interpret shaky footage.

- Clean Audio: Background noise confuses the transcription engine.

Think of your video not as a movie, but as a database of clips that the AI can remix.
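One way to picture this: store each chapter as a record with its own time range and transcript. A query then returns a timestamped deep link instead of the whole video. This is a toy illustration of the "database of clips" idea, not how Veo or Sora actually retrieve footage. The `Clip` record, `find_clips`, and the sample data are hypothetical, and matching is naive substring search over each clip's title and transcript.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """One self-contained segment of a video (times in seconds)."""
    start: int
    end: int
    title: str
    text: str  # transcript for this segment only


def find_clips(clips, query, base_url):
    """Return deep links to clips whose title or transcript contains every query term."""
    terms = query.lower().split()
    links = []
    for clip in clips:
        haystack = f"{clip.title} {clip.text}".lower()
        if all(term in haystack for term in terms):
            links.append(f"{base_url}?t={clip.start}")
    return links


# Hypothetical router-support video split into modular chapters.
library = [
    Clip(0, 20, "Intro", "Welcome back to the channel."),
    Clip(20, 75, "Reset the router",
         "Hold the reset button for ten seconds until the lights blink."),
    Clip(75, 140, "Update firmware",
         "Open the admin page and upload the firmware file."),
]

print(find_clips(library, "reset router", "https://example.com/video"))
# ['https://example.com/video?t=20']
```

The design choice to notice: because each clip carries its own transcript, a self-contained segment can answer a query without the surrounding video. A clip that depends on context from an earlier segment cannot.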

Tool Review: Auto-Generating "AI-Readable Metadata"

You don't have to do this manually. A new wave of creator tools automates much of generative video optimization. Here is our head-to-head review.

| Tool | Best For | The "Killer Feature" |
|---|---|---|
| Veed.io (AI Pro) | Creators | Automatically removes silence and adds subtitles that are indexable. |
| Descript 2026 | Editors | "Overdub" feature lets you fix keyword pronunciation without re-recording. |
| VidIQ Max | Strategists | Predicts which "Entity Keywords" are trending on YouTube's AI before you record. |
| TubeBuddy AI | SEOs | Auto-generates the JSON-LD Schema code based on your video content. |

Verdict: If you are a solo creator, start with Descript. If you are an enterprise media company, you need the schema power of TubeBuddy AI.

Summary: Adapt or Disappear

The era of "upload and pray" is over. Ranking in Sora, Veo, and YouTube's 2026 AI algorithm demands precision. You must write for the ear (the transcript), film for the eye (the pixel analysis), and code for the brain (the schema). Creators who master the Script-to-Rank formula won't just get views; they can become the primary source material for the AI answers of the future.

Frequently Asked Questions (FAQ)

Does the Script-to-Rank formula kill creativity?

Not at all. It simply front-loads the context. You can still be creative, but only after you have told the AI exactly what the video is about in the first 15 seconds. Think of it as a "digital handshake" with the algorithm.

How is Google Veo different from YouTube Search?

YouTube Search lists videos. Google Veo answers questions using video. Veo might show the user a specific 10-second clip from the middle of your video that perfectly answers "how to reset a router," without the user ever visiting your channel.

Do I still need schema markup if my video is on YouTube?

Yes. YouTube does some indexing on its own. Embedding the video on your own blog with VideoObject schema markup, however, gives you a "double dip" opportunity: both the YouTube URL and your blog URL can rank in the same AI search result.

Can I optimize videos I have already published?

Yes. You cannot change the video file, but you can update its metadata. Go back and rewrite titles, descriptions, and pinned comments using "Entity Keywords," and add the JSON-LD schema to your website embeds.

What is generative video optimization?

Generative video optimization is the practice of creating video content that Generative AI models can easily understand, slice, and remix. It focuses on clarity, modularity, and strong metadata signals.

Sources and References

- Google Veo: Google's generative video model and search technology.

- OpenAI Sora: The leading text-to-video model that is influencing search behaviors.

- Schema.org (VideoObject): The official documentation for video structured data.

- Descript: An AI-powered video editing tool referenced in the tool review.

- VidIQ: An analytics tool for YouTube optimization.