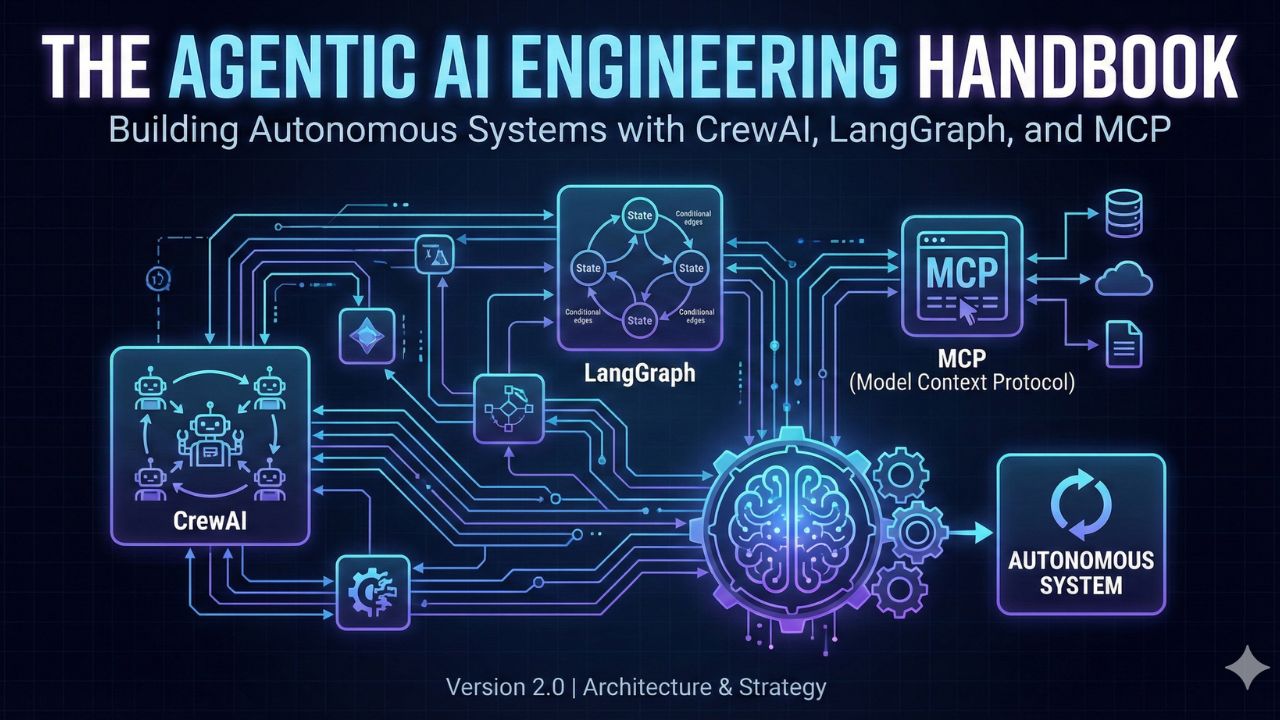

Agentic AI Architecture: The Engineering Handbook

- Shift from Passive to Active: Learn why we are moving from Generative AI to Agentic AI Architecture.

- Framework Wars: A definitive guide to choosing between CrewAI vs LangGraph for enterprise systems.

- 8 System Blueprints: Production-ready architectures for Financial Intelligence, DevOps Squads, and Personal Digital Twins.

Version: 2.0 (Architecture Edition)

Target Audience: Solution Architects, Engineering Leads, and Technical Founders

Focus: System Design, AI Agent Orchestration, and Strategic Implementation

1. Introduction: The Blueprint for Autonomy

From "Prompting" to "Orchestration"

The era of Generative AI—using LLMs to merely write emails or poems—is ending. The era of Agentic AI has begun.

For engineering leaders, this shift is fundamental. We are no longer building tools that assist humans; we are architecting systems that act like them. These Autonomous AI Agents plan, execute, debug, and collaborate to solve complex problems without constant human oversight.

What is Agentic AI? Unlike passive models, these systems possess agency. This handbook is not a collection of code snippets. It is a rigorous Agentic AI Architecture roadmap designed to take you from a "Prompt Engineer" to an AI Systems Architect.

You will master the strategic pillars of modern Enterprise AI Agent Architecture:

- AI Agent Orchestration: When to choose CrewAI (Role-based) vs. LangGraph (State-based).

- Connectivity: Leveraging the Model Context Protocol (MCP) for universal data access.

- Multi-Agent System Design: Blueprints for scalable, secure agent swarms.

2. The Core Stack: Theory & Architecture

2.1 The "Holy Trinity" of Agent Frameworks

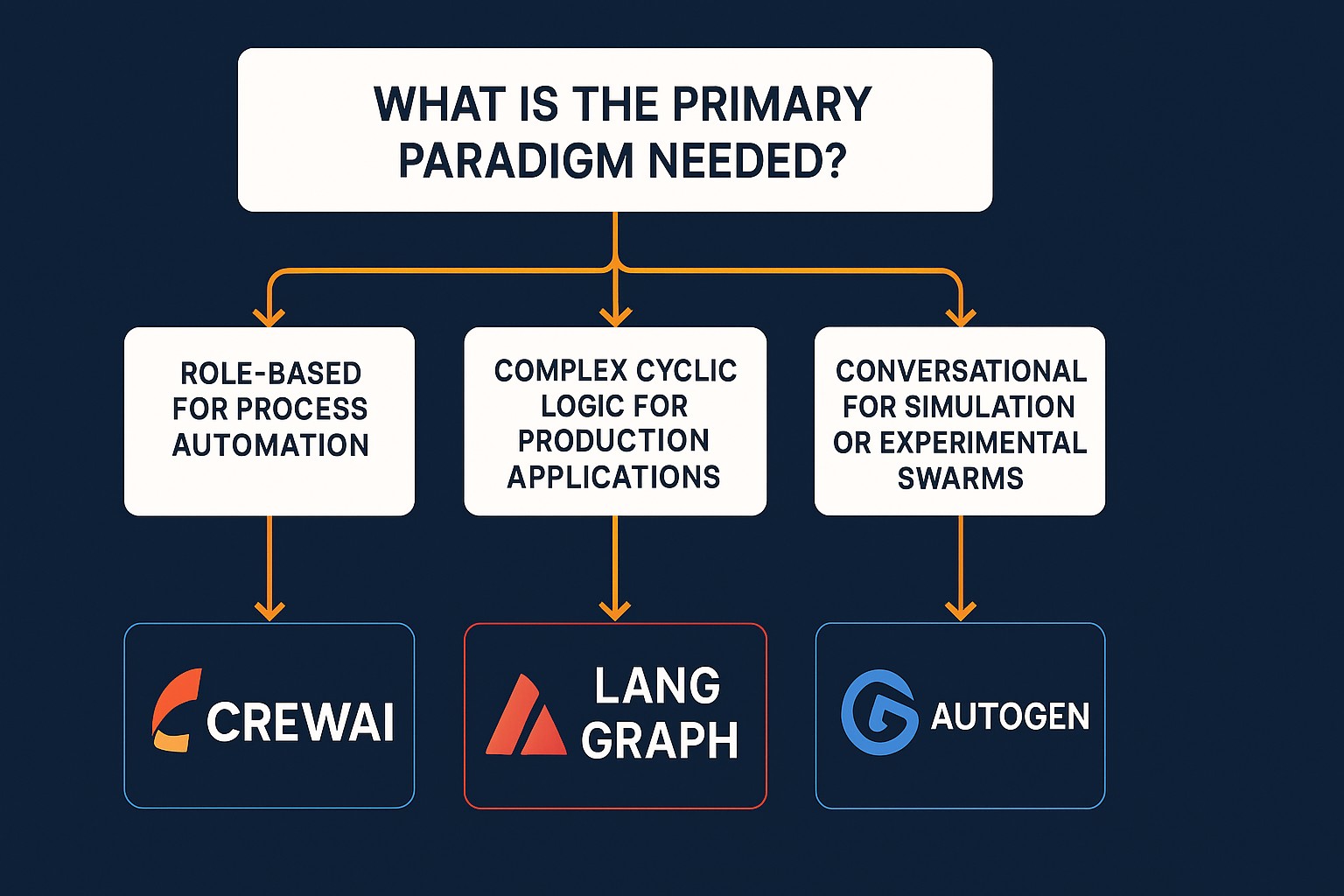

Confusion is high. Should you use AutoGen? CrewAI? LangGraph? We break down the Enterprise AI Architecture trade-offs for decision-makers.

CrewAI vs LangGraph vs AutoGen: A Quick Comparison

| Feature | CrewAI | LangGraph | AutoGen |
|---|---|---|---|
| Paradigm | Role-Based (Manager/Worker) | Graph-Based (Nodes/Edges) | Conversational (Multi-agent Chat) |
| Best For | Process automation, Research teams | Production apps, Cyclic Logic | Simulation, Experimental swarms |
| Learning Curve | Low (Pythonic, intuitive) | High (Requires state management) | Medium |

The Verdict:

- CrewAI vs LangGraph: Choose CrewAI for linear, hierarchical teams. Choose LangGraph for complex, cyclic state management.

- LangGraph vs AutoGen: Use LangGraph for production engineering; use AutoGen for experimental conversational swarms.
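To make "cyclic state management" concrete, here is a minimal, framework-free sketch in plain Python. It does not use the LangGraph or CrewAI APIs; the node names (`draft`, `review`), the `State` fields, and the `run_graph` helper are all hypothetical, chosen only to show a graph whose conditional edge loops back to an earlier node until a condition holds.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class State:
    """Shared state passed between nodes (hypothetical fields)."""
    draft: str = ""
    revisions: int = 0
    approved: bool = False
    history: List[str] = field(default_factory=list)


def draft_node(state: State) -> State:
    # Each pass produces a new revision of the draft.
    state.revisions += 1
    state.draft = f"draft v{state.revisions}"
    state.history.append("draft")
    return state


def review_node(state: State) -> State:
    # Stand-in review rule: approve once the third revision arrives.
    state.approved = state.revisions >= 3
    state.history.append("review")
    return state


def route_after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until the review passes.
    return "END" if state.approved else "draft"


NODES: Dict[str, Callable[[State], State]] = {
    "draft": draft_node,
    "review": review_node,
}
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda state: "review",
    "review": route_after_review,
}


def run_graph(entry: str, state: State, max_steps: int = 20) -> State:
    """Walk the graph until END, with a hard cap against infinite loops."""
    current, steps = entry, 0
    while current != "END":
        if steps >= max_steps:
            raise RuntimeError("step limit reached before END")
        state = NODES[current](state)
        current = EDGES[current](state)
        steps += 1
    return state


final = run_graph("draft", State())
print(final.draft, final.history)
```

A role-based, linear team would be the same structure without the back edge from `review` to `draft`; the loop and the explicit shared state are what push a design toward a graph-based framework.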

Deep Dive: CrewAI vs. LangGraph: A CTO's Guide to Choosing the Right Framework →

2.2 The "USB-C" of AI: Model Context Protocol (MCP) Guide

Building a custom API connector for every tool does not scale. The Model Context Protocol (MCP) is an open standard, introduced by Anthropic, that standardizes how agents connect to tools and data sources.

Why it matters: Build one MCP server for your PostgreSQL database, and any MCP-compatible client (Claude, ChatGPT, or your custom bot) can query it through the same interface, subject to whatever permissions the server enforces.
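The "one connector, many clients" idea can be sketched without any SDK. The example below is loosely modelled on MCP's JSON-RPC 2.0 `tools/list` and `tools/call` methods, but it is not the official MCP SDK and its error handling is simplified. It uses an in-memory SQLite database as a stand-in for PostgreSQL, and the tool name `run_select`, the `customers` table, and the `handle` function are assumptions made for illustration.

```python
import json
import sqlite3

# Stand-in database: in-memory SQLite instead of PostgreSQL (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO customers (name) VALUES (?)", [("Ada",), ("Grace",)])

TOOLS = [{
    "name": "run_select",  # hypothetical tool name
    "description": "Run a single read-only SELECT query.",
    "inputSchema": {
        "type": "object",
        "properties": {"sql": {"type": "string"}},
        "required": ["sql"],
    },
}]


def run_select(sql: str) -> list:
    # Crude guard for the sketch: accept only one SELECT statement.
    stripped = sql.strip().rstrip(";")
    if not stripped.lower().startswith("select") or ";" in stripped:
        raise ValueError("only single SELECT statements are permitted")
    return conn.execute(stripped).fetchall()


def handle(request: dict) -> dict:
    """Dispatch one JSON-RPC 2.0 request and build the response envelope."""
    response = {"jsonrpc": "2.0", "id": request.get("id")}
    try:
        if request["method"] == "tools/list":
            response["result"] = {"tools": TOOLS}
        elif request["method"] == "tools/call":
            params = request["params"]
            if params["name"] != "run_select":
                raise ValueError(f"unknown tool: {params['name']}")
            rows = run_select(params["arguments"]["sql"])
            response["result"] = {
                "content": [{"type": "text", "text": json.dumps(rows)}]
            }
        else:
            response["error"] = {"code": -32601, "message": "Method not found"}
    except (KeyError, ValueError, sqlite3.Error) as exc:
        response["error"] = {"code": -32602, "message": str(exc)}
    return response


reply = handle({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "run_select",
               "arguments": {"sql": "SELECT name FROM customers ORDER BY id"}},
})
print(reply["result"]["content"][0]["text"])
```

Because every client sends the same request shape, the read-only rule lives in one place (`run_select`) rather than in each agent's prompt or glue code.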

Concept Guide: Understanding the MCP Architecture for Enterprise Data →

3. The Architect’s Track: 8 System Blueprints

We have structured this curriculum into three "Tiers" of complexity, mirroring a real-world engineering evolution.

Tier 1: The "AI-Augmented Engineer" (Personal Productivity)

Goal: Automate daily friction.

The Career Digital Twin

- The Design Challenge: How to structure a RAG (Retrieval Augmented Generation) system that represents your professional history without hallucinating skills you don't have.

- Recommended Stack: Vector Database (Pinecone) + OpenAI Assistants API

- Architecture Focus: Data ingestion pipelines and context-window management.
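As a rough illustration of context-window management, the sketch below greedily packs the highest-scoring retrieved chunks into a fixed token budget. It is independent of Pinecone and the OpenAI Assistants API; the function names, the relevance scores, and the one-token-per-word estimate are assumptions, and a real system would use the model's tokenizer.

```python
from typing import List, Tuple


def estimate_tokens(text: str) -> int:
    # Rough assumption: one token per whitespace-separated word.
    return len(text.split())


def pack_context(ranked_chunks: List[Tuple[float, str]], budget: int) -> List[str]:
    """Keep the highest-scoring chunks that still fit in the token budget."""
    selected: List[str] = []
    used = 0
    for _score, chunk in sorted(ranked_chunks, key=lambda pair: pair[0], reverse=True):
        cost = estimate_tokens(chunk)
        if used + cost <= budget:
            selected.append(chunk)
            used += cost
    return selected


# Hypothetical retrieval results: (relevance score, chunk text).
chunks = [
    (0.9, "Led migration of billing service to Kubernetes"),
    (0.4, "Enjoys hiking and photography on weekends"),
    (0.8, "Built CI pipelines in GitHub Actions for twelve teams"),
]
context = pack_context(chunks, budget=16)
print(context)
```

Restricting the prompt to chunks that were actually retrieved from your own history is also the main lever against the twin claiming skills you do not have.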

Read the Digital Twin System Design →

The Browser Operator Agent

- The Design Challenge: Giving an LLM control over a web browser (Computer Use) while limiting what it can reach and do.

- Recommended Stack: LangGraph + Playwright integration

- Global Use Case: Automating SaaS procurement workflows and "ClickOps" tasks.
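One common guardrail is to validate every proposed browser action before it is executed. The sketch below is plain Python and does not call Playwright; the action dictionaries, the allowlists, and the three verdicts (`allow`, `deny`, `review`) are hypothetical conventions for illustration.

```python
from urllib.parse import urlparse

ALLOWED_DOMAINS = {"vendor.example.com"}      # hypothetical domain allowlist
ALLOWED_ACTIONS = {"goto", "click", "fill"}   # hypothetical action vocabulary
SENSITIVE_WORDS = ("pay", "purchase", "delete")


def check_action(action: dict) -> str:
    """Return 'allow', 'deny', or 'review' for one proposed browser action."""
    kind = action.get("type")
    if kind not in ALLOWED_ACTIONS:
        return "deny"
    if kind == "goto":
        host = urlparse(action.get("url", "")).hostname or ""
        return "allow" if host in ALLOWED_DOMAINS else "deny"
    # For click/fill, route anything that looks irreversible to a human.
    target = action.get("selector", "").lower()
    if any(word in target for word in SENSITIVE_WORDS):
        return "review"
    return "allow"


print(check_action({"type": "goto", "url": "https://vendor.example.com/pricing"}))
print(check_action({"type": "click", "selector": "button#confirm-purchase"}))
```

Only actions that return `allow` would be forwarded to the browser driver; `review` pauses the run for a human decision.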

Explore the Browser Operator Workflow →

Tier 2: Financial Intelligence Systems

Goal: High-stakes decision-making with multi-modal data.

The Deep Research Analyst

- The Design Challenge: Preventing "Rabbit Holes." How to structure a recursive agent loop that knows when it has enough information to stop searching.

- Recommended Stack: Recursive Search Loops + Citation Validation Layers

- Outcome: A blueprint for a system that produces "McKinsey-grade" market research reports.
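A simple way to avoid rabbit holes is to stop when a search round adds too little new information or when a hard round limit is hit. The sketch below writes the loop iteratively rather than recursively; the `search` callable, the follow-up query format, and both thresholds are assumptions, and `fake_search` only exists to make the example runnable.

```python
from typing import Callable, List, Set


def research(question: str,
             search: Callable[[str], List[str]],
             max_rounds: int = 5,
             min_new_facts: int = 1) -> Set[str]:
    """Search repeatedly, stopping on low novelty or at the round limit."""
    facts: Set[str] = set()
    query = question
    for round_no in range(max_rounds):
        new = set(search(query)) - facts
        facts |= new
        if len(new) < min_new_facts:
            break  # this round added too little; stop searching
        # Placeholder follow-up; a real agent would ask the LLM to refine it.
        query = f"{question} (follow-up {round_no + 1})"
    return facts


calls: List[str] = []


def fake_search(query: str) -> List[str]:
    # Canned results standing in for a real search tool.
    calls.append(query)
    canned = [["a", "b"], ["b", "c"], ["c"], ["d"]]
    return canned[min(len(calls) - 1, len(canned) - 1)]


found = research("market size for widgets", fake_search)
print(sorted(found), len(calls))
```

Here the third round returns nothing new, so the loop exits before ever issuing the fourth query.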

Architecting the Deep Research Logic →

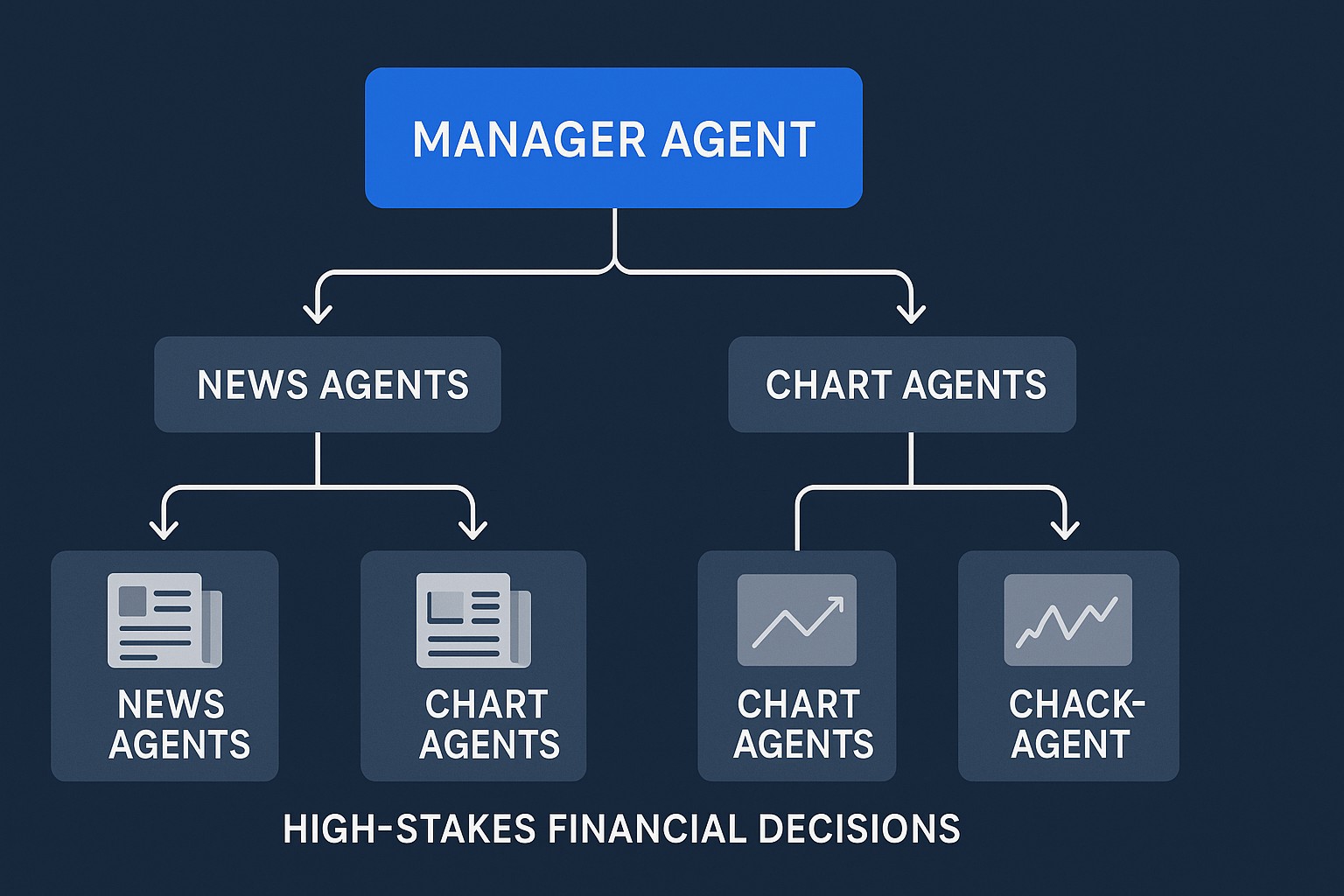

The "Wall Street" Sentiment Analyzer

- The Design Challenge: Real-time data ingestion and conflict resolution between agents (e.g., when "Chart Agent" says Buy, but "News Agent" says Sell).

- Recommended Stack: CrewAI Hierarchical Process + Alpha Vantage APIs

- Architecture Focus: Event-driven architecture for financial signals.
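Conflict resolution between agents can be as simple as a confidence-weighted vote that abstains when the margin is thin. This is one possible policy, not the handbook's prescribed method; the `Signal` fields, the weights, and the `min_margin` threshold are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Signal:
    agent: str
    action: str         # "BUY" or "SELL"
    confidence: float   # 0.0 to 1.0
    weight: float = 1.0


def resolve(signals: List[Signal], min_margin: float = 0.2) -> str:
    """Combine signals into BUY, SELL, or HOLD when the net margin is small."""
    score, total = 0.0, 0.0
    for signal in signals:
        if signal.action not in ("BUY", "SELL"):
            raise ValueError(f"unknown action: {signal.action}")
        direction = 1.0 if signal.action == "BUY" else -1.0
        score += direction * signal.confidence * signal.weight
        total += signal.weight
    if total == 0:
        return "HOLD"
    net = score / total
    if net >= min_margin:
        return "BUY"
    if net <= -min_margin:
        return "SELL"
    return "HOLD"


close_call = resolve([Signal("Chart Agent", "BUY", 0.7),
                      Signal("News Agent", "SELL", 0.6)])
clear_call = resolve([Signal("Chart Agent", "BUY", 0.9),
                      Signal("News Agent", "SELL", 0.2)])
print(close_call, clear_call)
```

When the two agents disagree with similar confidence, the resolver returns `HOLD` instead of picking a side, which is usually the safer default for financial signals.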

View the Financial Swarm Blueprint →

Capstone – The Sovereign Trading Terminal

- The Design Challenge: The ultimate integration test. Designing 4 Autonomous AI Agents communicating via 6 distinct MCP Servers (including File System, SQLite, Brave Search, and TimeAPI).

- Recommended Stack: Anthropic MCP SDK + Multi-Agent Orchestration

- Outcome: A reference architecture for a local, privacy-first financial terminal.

Analyze the Capstone Architecture →

Tier 3: Enterprise Scalability & Governance

Goal: Production-grade systems for the Fortune 500.

The Autonomous SDR (Sales) Force

- The Design Challenge: Managing rate limits, PII (Personally Identifiable Information) protection, and email domain reputation in an automated loop.

- Recommended Stack: CrewAI + Gmail API + CRM Integration

- Architecture Focus: Human-in-the-loop checkpoints for email approval.
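An approval checkpoint and a send cap can live in the same small component. The sketch below is plain Python and does not call the Gmail API or any CRM; the `Draft` and `Outbox` classes, the per-window cap, and the `send` callback are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class Draft:
    to: str
    body: str
    approved: bool = False


class Outbox:
    """Holds agent-written drafts until a human approves them."""

    def __init__(self, max_per_window: int) -> None:
        self.max_per_window = max_per_window
        self.pending: Deque[Draft] = deque()
        self.sent: List[Draft] = []

    def submit(self, draft: Draft) -> None:
        self.pending.append(draft)

    def approve(self, index: int) -> None:
        # Called from the human review step, never by the agent itself.
        self.pending[index].approved = True

    def flush(self, send: Callable[[Draft], None]) -> int:
        """Send approved drafts up to the cap; keep everything else queued."""
        remaining: Deque[Draft] = deque()
        sent_now = 0
        while self.pending:
            draft = self.pending.popleft()
            if draft.approved and sent_now < self.max_per_window:
                send(draft)
                self.sent.append(draft)
                sent_now += 1
            else:
                remaining.append(draft)
        self.pending = remaining
        return sent_now


outbox = Outbox(max_per_window=2)
for i in range(3):
    outbox.submit(Draft(to=f"lead{i}@example.com", body="Hello"))
outbox.approve(0)
outbox.approve(2)
delivered: List[Draft] = []
count = outbox.flush(delivered.append)
print(count, [d.to for d in delivered], len(outbox.pending))
```

The unapproved draft stays in the queue, and the cap protects domain reputation even if a reviewer approves a large batch at once.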

Designing the Autonomous Sales Pipeline →

The "DevOps Squad" (Sandboxed AI Environments)

- The Design Challenge: Safety. How to architect a system where AI writes/executes code without destroying your production environment.

- Recommended Stack: Docker Containers for ephemeral Sandboxed AI Environments.

- Global Use Case: Designing an open-source alternative to "Devin" for automated bug patching.
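A minimal sketch of an ephemeral sandbox is a locked-down `docker run` invocation built per task. The helper below only constructs the command; the function name, the resource limits, and the `python:3.12-slim` image are assumptions, and the flags shown are a starting point rather than a complete hardening profile.

```python
from typing import List


def sandbox_command(image: str, code: str,
                    memory: str = "256m", cpus: str = "1") -> List[str]:
    """Build a docker run command for one throwaway, isolated execution."""
    return [
        "docker", "run",
        "--rm",                    # delete the container when it exits
        "--network", "none",       # no network access from generated code
        "--read-only",             # root filesystem is read-only
        "--memory", memory,        # cap RAM
        "--cpus", cpus,            # cap CPU
        "--pids-limit", "64",      # limit process count (fork bombs)
        "--cap-drop", "ALL",       # drop all Linux capabilities
        "--user", "65534:65534",   # run as an unprivileged user
        image, "python", "-c", code,
    ]


cmd = sandbox_command("python:3.12-slim", "print(2 + 2)")
print(" ".join(cmd[:6]))
```

To execute it, the list can be passed to `subprocess.run(cmd, capture_output=True, text=True, timeout=30)` on a host with Docker installed; the timeout bounds runaway code from outside the container.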

Secure DevOps Environment Patterns →

The Meta-Agent (Self-Replicating Systems)

- The Design Challenge: Using AutoGen to create an agent capable of writing the configuration files for other agents.

- Recommended Stack: Meta-Prompting + Jinja2 Templating + AutoGen

- The Future: Conceptualizing systems that scale their own workforce based on ticket volume.
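The core mechanic of a meta-agent, rendering configuration for other agents from a template, can be shown in a few lines. This sketch uses the standard library's `string.Template` as a stand-in for Jinja2 and does not use AutoGen; the config fields, the sizing rule of 25 tickets per agent, and the cap of 10 agents are all assumptions.

```python
import math
from string import Template
from typing import List

# Stand-in for a Jinja2 template (assumption: fields are illustrative only).
AGENT_TEMPLATE = Template(
    "name: $name\n"
    "role: $role\n"
    "goal: $goal\n"
)


def plan_workforce(open_tickets: int, tickets_per_agent: int = 25,
                   max_agents: int = 10) -> int:
    """Size the agent pool from ticket volume, bounded to [1, max_agents]."""
    needed = math.ceil(open_tickets / tickets_per_agent)
    return min(max_agents, max(1, needed))


def render_configs(open_tickets: int) -> List[str]:
    """Render one config document per planned agent."""
    return [
        AGENT_TEMPLATE.substitute(
            name=f"support-agent-{i + 1}",
            role="Support Triage",
            goal="Resolve assigned tickets",
        )
        for i in range(plan_workforce(open_tickets))
    ]


configs = render_configs(open_tickets=60)
print(len(configs))
print(configs[-1])
```

The upper bound matters as much as the template: a system that scales its own workforce needs a ceiling it cannot rewrite.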

The Hardware Foundation: TPU vs GPU Optimization

- The Design Challenge: Deciding between the raw flexibility of Nvidia H100s and the cost-efficiency of Google Cloud TPUs for serving agent models.

- Recommended Stack: Google Cloud TPU v5e + vLLM (PagedAttention)

- Architecture Focus: Optimizing "Tokens-Per-Dollar" for high-volume agentic workloads.
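"Tokens-Per-Dollar" reduces to sustained throughput divided by hourly price. The helper below shows the arithmetic; the throughput and price figures are made-up placeholders, not benchmarks or list prices for any GPU or TPU.

```python
def tokens_per_dollar(tokens_per_second: float, hourly_cost_usd: float) -> float:
    """Tokens generated per US dollar at a sustained throughput."""
    if hourly_cost_usd <= 0:
        raise ValueError("hourly cost must be positive")
    return tokens_per_second * 3600 / hourly_cost_usd


# Placeholder numbers for two hypothetical serving options.
option_a = tokens_per_dollar(tokens_per_second=2000, hourly_cost_usd=4.0)
option_b = tokens_per_dollar(tokens_per_second=1200, hourly_cost_usd=1.5)
print(option_a, option_b)
```

In this made-up comparison the slower option wins on cost efficiency, which is why the metric should be measured on your own workload rather than inferred from peak throughput.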

Read the Hardware Migration Guide →

4. Production Readiness Checklist

Before you approve any of these architectures for production, ensure you have addressed:

- Observability: How will you trace agent "thought chains" (using tools like LangSmith)?

- Cost Control: Have you designed hard limits on token usage and loop iterations to prevent "runaway agents"?

- Human-in-the-Loop (HITL): Does your architecture allow a human to override high-stakes actions?
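The cost-control item can be enforced with a small budget object that every agent step must charge against. This is a sketch under stated assumptions: the `TokenBudget` class, the `BudgetExceeded` exception, and the limits are hypothetical, and token counts would come from your model provider's usage data.

```python
class BudgetExceeded(RuntimeError):
    """Raised when an agent run exceeds its token or step allowance."""


class TokenBudget:
    def __init__(self, max_tokens: int, max_steps: int) -> None:
        self.max_tokens = max_tokens
        self.max_steps = max_steps
        self.tokens = 0
        self.steps = 0

    def charge(self, tokens: int) -> None:
        """Record one step and stop the run once either limit is passed."""
        self.steps += 1
        self.tokens += tokens
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} exceeded")
        if self.tokens > self.max_tokens:
            raise BudgetExceeded(f"token limit {self.max_tokens} exceeded")


budget = TokenBudget(max_tokens=1000, max_steps=5)
stopped = False
try:
    while True:              # stand-in for an agent loop that never finishes
        budget.charge(300)   # pretend each step used 300 tokens
except BudgetExceeded:
    stopped = True
print(stopped, budget.steps, budget.tokens)
```

Raising an exception, rather than returning a flag, makes the limit hard: the loop cannot continue unless the caller explicitly catches and overrides it.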

5. Frequently Asked Questions (FAQ)

Q: What is the difference between Generative AI and Agentic AI?

A: Generative AI is passive; it waits for a prompt and produces text or an image. Agentic AI is active and goal-oriented. An agent is given a broad objective, and it autonomously breaks that goal into sub-tasks, uses tools, executes actions, and iterates without constant human intervention.

Q: Why does this handbook focus on architecture rather than code?

A: Code libraries like LangChain or CrewAI update almost weekly, rendering static code snippets obsolete. However, Enterprise AI Architecture patterns, like "RAG Pipelines" and "Orchestration Layers", are evergreen. This handbook teaches you how to think like an AI Architect.

Q: Should I choose CrewAI or LangGraph?

A: Choose CrewAI for role-based teams needing fast deployment. Choose LangGraph if your Multi-Agent System Design requires complex state management and cyclic logic (loops).

Q: How do I keep autonomous agents safe?

A: Safety must be architected into the system. We recommend running code in Docker containers to create ephemeral Sandboxed AI Environments and using a Human-in-the-loop AI workflow for high-stakes actions.

6. Sources & References

This handbook synthesizes architectural patterns from the official documentation of the world’s leading AI frameworks and standards.

Core Frameworks & Documentation

- CrewAI: Official Documentation & Architecture

- LangGraph: LangChain AI Documentation

- Microsoft AutoGen: Microsoft Research GitHub

Standards & Protocols

- Model Context Protocol (MCP): Official MCP Documentation

- Docker for AI: GenAI Stack with Docker

Seminal Engineering Reading

- "The Rise of the AI Engineer" by Latent Space: Read the Essay

- "Building LLM Applications for Production" by Chip Huyen: Read the Guide

- "Agentic AI Trends": McKinsey Technology Trends Outlook

Community & Tools

- LangSmith: Observability Platform

- Ollama: Local LLM Inference